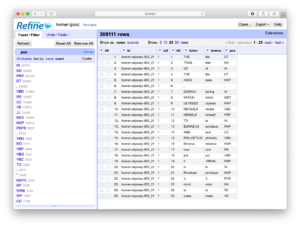

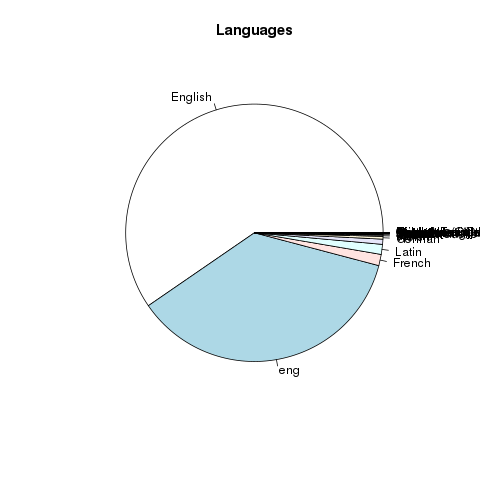

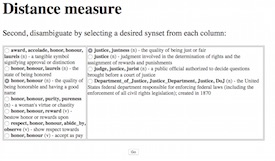

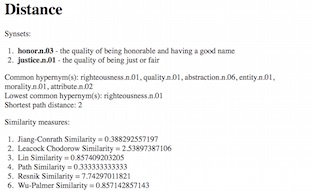

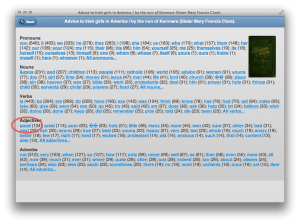

Facets (counts & tabulations) of parts-of-speech

The student, researcher, or scholar can use OpenRefine to open one or more different types of delimited files. OpenRefine will then parse the file(s) into fields. It makes many things easy, such as finding/replacing, faceting (think “grouping”), filtering (think “searching”), sorting, clustering (think “normalizing/cleaning”), counting & tabulating, and finally, exporting data. OpenRefine is an excellent go-between when spreadsheets fail and full-blown databases are too hard to use. OpenRefine eats delimited files for lunch.

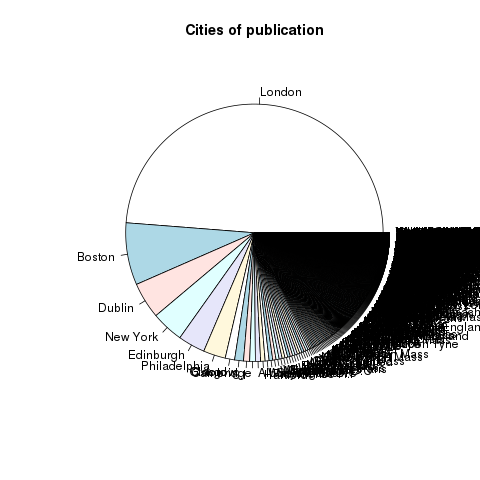

Many (actually, most) of the files in a study carrel are tab-delimited files, and they will import into OpenRefine with ease. For example, after all of a carrel’s part-of-speech (pos) files are imported into OpenRefine, the student, researcher, or scholar can very easily count, tabulate, search (filter), and facet on nouns, verbs, adjectives, etc. If the named entities files (ent) are imported, then it is easy to see what types of entities exist and who might be the people mentioned in the carrel:

Like everything else, using OpenRefine requires practice. The problem to solve is not so much learning how to use OpenRefine. Instead, the problem to solve is to ask and answer interesting questions. That said, the student, researcher, or scholar will want to sort the data, search/filter the data, and compare pieces of the data to other pieces to articulate possible relationships. The following recipes endeavor to demonstrate some such tasks. The first is to simply facet (count & tabulate) on parts-of-speech files:

Faceting is a whole lot like “grouping” in the world of relational databases. Faceting alphabetically sorts a list and then counts the number of times each item appears in the list. Different types of works have different parts-of-speech ratios. For example, it is not uncommon for there to be a preponderance of past-tense verbs in stories. Counts & tabulations of personal pronouns as well as proper nouns give senses of genders. A more in-depth faceting against adjectives alludes to sentiment.
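Outside of OpenRefine, faceting amounts to counting and sorting, and it can be sketched in a few lines of Python. This is only an illustration: the sample rows and the column names (id, token, pos) are hypothetical and are not taken from an actual study carrel.

```python
import csv
import io
from collections import Counter

# Hypothetical sample of a tab-delimited parts-of-speech file;
# the column names (id, token, pos) are assumptions for illustration.
SAMPLE = (
    "id\ttoken\tpos\n"
    "1\tAchilles\tNNP\n"
    "1\tsailed\tVBD\n"
    "1\tships\tNNS\n"
    "2\tHector\tNNP\n"
    "2\tfought\tVBD\n"
    "2\tbravely\tRB\n"
)

def facet(handle, column):
    """Count how many times each value appears in the given column."""
    reader = csv.DictReader(handle, delimiter="\t")
    return Counter(row[column] for row in reader)

counts = facet(io.StringIO(SAMPLE), "pos")

# List each facet value alphabetically, along with its count.
for value in sorted(counts):
    print(value, counts[value], sep="\t")
```

To facet a real file, an open file handle can be passed in place of the in-memory sample, provided the column name matches the file's header.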

This recipe outlines how to filter (“search”):

By combining the functionalities of faceting and filtering, the student, researcher, or scholar can investigate the original content more deeply or at least in different ways. The use of OpenRefine in this way is akin to leafing through a book or a back-of-the-book index. As patterns & anomalies present themselves, they can be followed up more thoroughly through the use of a concordance, where one can literally see the patterns & anomalies in context.

This recipe answers the question, “Who is mentioned in a corpus, and how often?“:

This final recipe is a visualization:

A study carrel’s parts-of-speech (pos) and named-entities (ent) files enumerate each and every word or named-entity in each and every sentence of each and every item in the study carrel. Given a question relatively quantitative in nature and pertaining to parts-of-speech or named-entities, the pos and ent files are likely to be able to address the question. The pos and ent files are tab-delimited files, and OpenRefine is a very good tool for reading and analyzing such files. OpenRefine does much more than what was outlined here, but enumerating all of its features is beyond the scope of this posting. Such is left up to the… reader.
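For those who prefer scripting, a question like “Who is mentioned in a corpus, and how often?” can also be approximated by filtering and then faceting a tab-delimited file. The sketch below assumes a named-entity file with columns named id, entity, and type. Those names and the sample rows are hypothetical, so adjust them to match the header of a real ent file.

```python
import csv
import io
from collections import Counter

# Hypothetical tab-delimited named-entity rows; the columns
# (id, entity, type) are assumed for the sake of the example.
SAMPLE = (
    "id\tentity\ttype\n"
    "1\tAchilles\tPERSON\n"
    "1\tTroy\tGPE\n"
    "2\tHector\tPERSON\n"
    "3\tAchilles\tPERSON\n"
)

def count_entities(handle, entity_type):
    """Filter rows to one entity type, then tabulate the entity names."""
    reader = csv.DictReader(handle, delimiter="\t")
    return Counter(row["entity"] for row in reader if row["type"] == entity_type)

people = count_entities(io.StringIO(SAMPLE), "PERSON")

# Most frequently mentioned people first.
for name, count in people.most_common():
    print(name, count, sep="\t")
```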

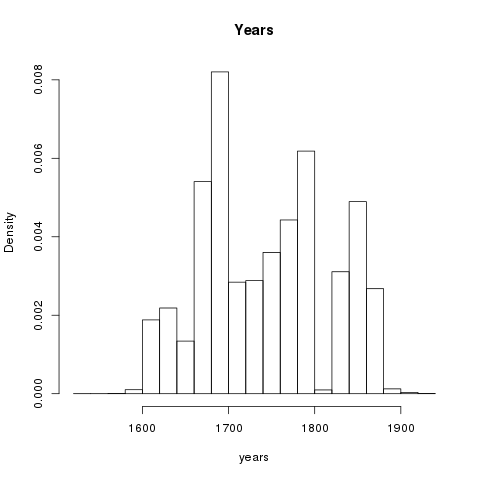

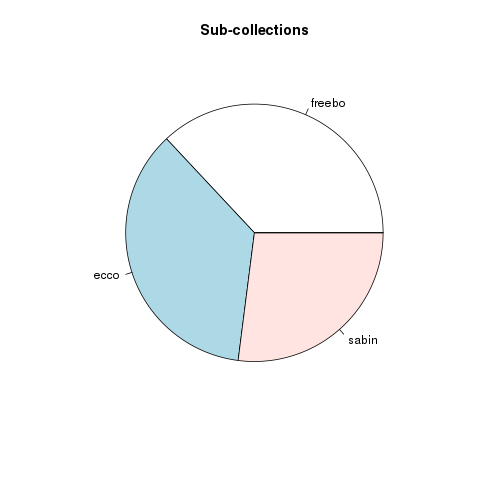

2020-02-09T21:19:33+00:00 Life of a Librarian: Topic Modeling Tool – Enumerating and visualizing latent themes http://sites.nd.edu/emorgan/2020/02/topic-modeling/

Technically speaking, topic modeling is an unsupervised machine learning process used to extract latent themes from a text. Given a text and an integer, a topic modeler will count & tabulate the frequency of words and compare those frequencies with the distances between the words. The words form “clusters” when they are both frequent and near each other, and these clusters can sometimes represent themes, topics, or subjects. Topic modeling is often used to denote the “aboutness” of a text or compare themes between authors, dates, genres, demographics, other topics, or other metadata items.

Topic Modeling Tool is a GUI/desktop topic modeler based on the venerable MALLET suite of software. It can be used in a number of ways, and it is relatively easy to use it to: list five distinct themes from the Iliad and the Odyssey, compare those themes between books, and, assuming each chapter occurs chronologically, compare the themes over time.

These few recipes are intended to get you up and running when it comes to Topic Modeling Tool. They are not intended to be a full-blown tutorial. This first recipe merely divides a corpus into the default number of topics and dimensions:

This recipe will make things less confusing:

There is no correct number of topics to extract with the process of topic modeling. “When considering the whole of Shakespeare’s writings, what is the number of topics it is about?” This being the case, repeat and re-repeat the previous recipe until you: 1) get tired, or 2) feel like the results are at least somewhat meaningful.

This recipe will help you make the results even cleaner by removing nonsense from the output:

Adding individual words to the stopword list can be tedious, and consequently, here is a power-user’s recipe to accomplish the same goal:

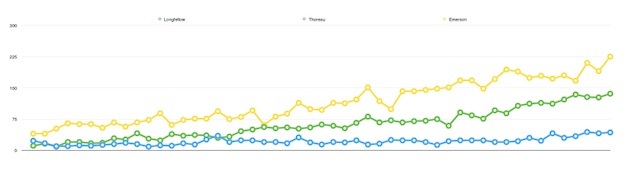

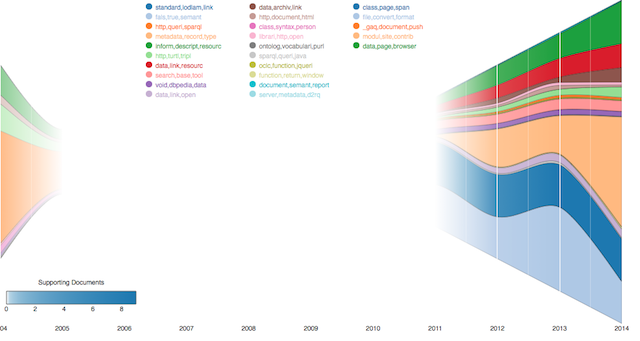

Now that you have somewhat meaningful topics, you will probably want to visualize the results, and one way to do that is to illustrate how the topics are dispersed over the whole of the corpus. Luckily, the list of topics displayed in the Tool’s console is tab-delimited, making it easy to visualize. Here’s how:

Because of a great feature in Topic Modeling Tool it is relatively easy to compare topics against metadata values such as authors, dates, formats, genres, etc. To accomplish this goal the raw numeric information output by the Tool (the actual model) needs to be supplemented with metadata, the data then needs to be pivoted, and subsequently visualized. This is a power-user’s recipe because it requires: 1) a specifically shaped comma-separated values (CSV) file, 2) Python and a few accompanying modules, and 3) the ability to work from the command line. That said, here’s a recipe to compare & contrast the two books of Homer:

python pivot.py); the result ought to be an error message outlining the input pivot.py expects

pivot.py again, but this time give it input; more specifically, specify “./model/output_csv/topics-metadata.csv” as the first argument (Windows users will specify .\model\output_csv\topics-metadata.csv), specify “barh” for the second argument, and “title” as the third argument; the result ought to be a horizontal bar chart illustrating the differences in topics across the Iliad and the Odyssey, and ask yourself, “To what degree are the books similar?”

The following recipe is very similar to the previous recipe, but it illustrates the ebb & flow of topics throughout the whole of the two books:

pivot.py and specify “./model/output_csv/topics-metadata.csv” as the first argument (Windows users will specify .\model\output_csv\topics-metadata.csv), specify “line” for the second argument, and “title” as the third argument; the result ought to be a line chart illustrating the increase & decrease of topics from the beginning of the saga to the end, and ask yourself “What topics are discussed concurrently, and what topics are discussed when others are not?”
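To give a sense of what “pivoting” means here, below is a minimal sketch in plain Python. It is not pivot.py, and it does not read the Tool's actual output. It assumes a hypothetical CSV layout with one row per document, one metadata column (title), and one weight column per topic, and it averages the topic weights for each distinct metadata value.

```python
import csv
import io
from collections import defaultdict

# Hypothetical stand-in for topics-metadata.csv: one row per document,
# a metadata column (title) and one weight column per topic.
SAMPLE = (
    "title,topic0,topic1\n"
    "Iliad,0.8,0.2\n"
    "Iliad,0.6,0.4\n"
    "Odyssey,0.3,0.7\n"
    "Odyssey,0.1,0.9\n"
)

def pivot(handle, field):
    """Average every topic column for each distinct value of the field."""
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(int)
    for row in csv.DictReader(handle):
        key = row.pop(field)
        counts[key] += 1
        for topic, weight in row.items():
            sums[key][topic] += float(weight)
    return {
        key: {topic: total / counts[key] for topic, total in topics.items()}
        for key, topics in sums.items()
    }

table = pivot(io.StringIO(SAMPLE), "title")
for title, topics in table.items():
    print(title, {topic: round(mean, 2) for topic, mean in topics.items()})
```

The resulting table (one row per title, one column per topic) is the shape a bar chart or line chart would be drawn from.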

Topic modeling is an effective process for “reading” a corpus “from a distance”. Topic Modeling Tool makes the process easier, but the process requires practice. Next steps are for the student to play with the additional options behind the “Optional Settings…” dialog box, read the Tool’s documentation, take a look at the structure of the CSV/metadata file, and take a look under the hood at pivot.py.

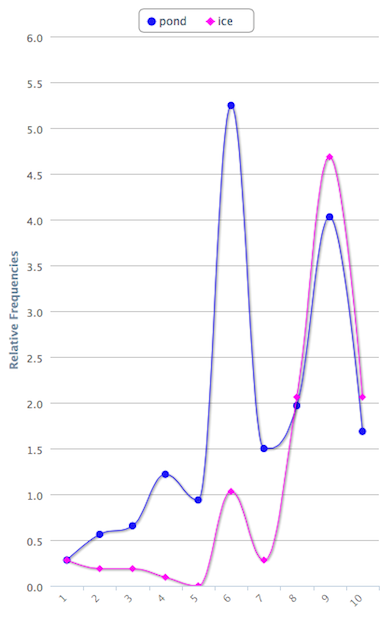

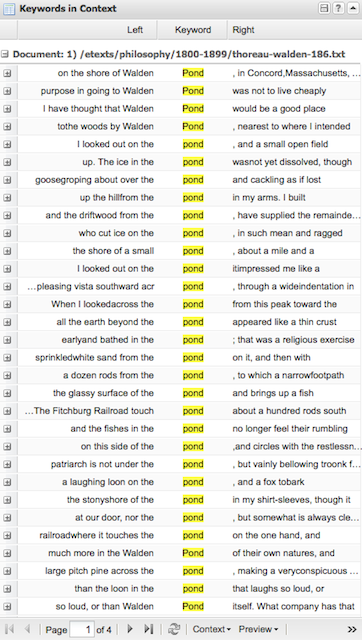

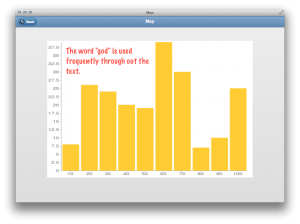

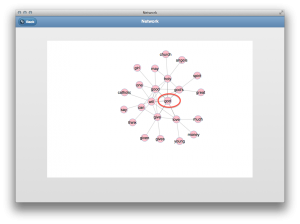

Concordancing is really a process about finding, and AntConc is a very useful program for this purpose. Given one or more plain text files, AntConc will enable the student, researcher, or scholar to: find all the occurrences of a word, illustrate where the word is located, navigate through document(s) where the word occurs, list word collocations, and calculate quite a number of useful statistics regarding a word. Concordancing, dating from the 13th Century, is the oldest form of text mining. Think of it as control-F (^f) on steroids. AntConc does all this and more. For example, one can load all of the Iliad and the Odyssey into AntConc, find all the occurrences of the word ship, visualize where ship appears in each chapter, and list the most significant words associated with the word ship.

This recipe simply implements search:

The result ought to be a list of phrases where the word of interest is displayed in the middle of the screen. In modern-day terms, such a list is called a “key word in context” (KWIC) index.
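The idea behind a KWIC index is simple enough to sketch in Python. The function below is an illustration of the technique, not of how AntConc is implemented. The function name, the sample text, and the 30-character context width are all assumptions made for the example.

```python
import re

def kwic(text, word, width=30):
    """Return key-word-in-context lines with the word centered."""
    lines = []
    pattern = re.compile(r"\b" + re.escape(word) + r"\b", re.IGNORECASE)
    for match in pattern.finditer(text):
        # Take up to `width` characters on either side of each hit.
        left = text[max(0, match.start() - width):match.start()]
        right = text[match.end():match.end() + width]
        lines.append(f"{left:>{width}} {match.group()} {right:<{width}}")
    return lines

TEXT = (
    "They launched the ship at dawn. The ship was black and swift, "
    "and no other ship could match it."
)

for line in kwic(TEXT, "ship"):
    print(line)
```

Because the left-hand context is padded to a fixed width, every occurrence of the search term lines up in the same column.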

This recipe combines search with “control-F”:

This recipe produces a dispersion plot, an illustration of where a search term appears in a document:

The result will be a list of illustrations. Each illustration will include zero or more vertical lines denoting the location of your search term in a given file. The more lines in an illustration, the more times the search term appears in the document.
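A rough, text-only version of a dispersion plot can be sketched in Python. This is an illustration of the concept under simple assumptions (a hypothetical function name, a made-up sample text, and 40 character-wide “bins”), not a reproduction of AntConc's plot.

```python
import re

def dispersion(text, word, bins=40):
    """Render a one-line plot: '|' where the word occurs, '.' elsewhere."""
    plot = ["."] * bins
    pattern = re.compile(r"\b" + re.escape(word) + r"\b", re.IGNORECASE)
    for match in pattern.finditer(text):
        # Scale the character offset of each hit to a bin number.
        position = match.start() * bins // max(len(text), 1)
        plot[position] = "|"
    return "".join(plot)

TEXT = "ship " + "sea " * 20 + "ship " + "sea " * 20 + "ship"

print(dispersion(TEXT, "ship"))
```

Running the function once per file, and printing the results one above the other, gives a crude sense of where in each document a term clusters.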

This recipe counts & tabulates the frequency of words:

It is quite probable the most frequent words will be “stop words” like the, a, an, etc. AntConc supports the elimination of stop words, and the Reader supplies a stop word list. Describing how to implement this functionality is too difficult to put into words. (No puns intended.) But here is an outline:
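Independent of AntConc's own settings, the effect of a stop word list on a frequency count can be sketched in Python. The tiny stop word list and the sample sentence below are made up for illustration. A real list, such as the one the Reader supplies, would be much longer.

```python
import re
from collections import Counter

# A tiny, illustrative stop word list; a real list would be much longer.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "was"}

def frequencies(text, stop_words=frozenset()):
    """Count lowercased words, skipping any found in stop_words."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(word for word in words if word not in stop_words)

TEXT = "The ship and the sea. The ship was a gift of the king."

# First without, and then with, the stop words removed.
print(frequencies(TEXT).most_common(3))
print(frequencies(TEXT, STOP_WORDS).most_common(3))
```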

Ideas are rarely articulated through the use of individual words; ideas are usually articulated through the use of sets of words (ngrams, sentences, paragraphs, etc.). Thus, as John Rupert Firth once said, “You shall know a word by the company it keeps.” This recipe outlines how to list word co-occurrences and collocations:

Again, a word is known by the company it keeps. Use the co-occurrences and collocations features to learn how a given word (or phrase) is associated with other words.
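As a rough illustration of “the company a word keeps”, the Python sketch below counts the words found within a small window of a given word. It tabulates raw co-occurrence counts only, and it does not compute the statistical measures a dedicated concordancer offers. The function name, window size, and sample text are assumptions made for the example.

```python
import re
from collections import Counter

def cooccurrences(text, word, window=2):
    """Count the words found within `window` tokens of each hit."""
    tokens = re.findall(r"[a-z']+", text.lower())
    neighbors = Counter()
    for index, token in enumerate(tokens):
        if token != word:
            continue
        # Gather the tokens immediately before and after the hit.
        start = max(0, index - window)
        context = tokens[start:index] + tokens[index + 1:index + 1 + window]
        neighbors.update(context)
    return neighbors

TEXT = "The black ship sailed. A swift black ship returned. The ship sank."

for neighbor, count in cooccurrences(TEXT, "ship").most_common(5):
    print(neighbor, count, sep="\t")
```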

There is much more to AntConc than what is outlined in the recipes above. Learning more is left up to you, the student, researcher, or scholar.

2020-01-31T20:02:21+00:00 Life of a Librarian: The Distant Reader Workbook http://sites.nd.edu/emorgan/2020/01/workbook/

I am in the process of writing a/the Distant Reader workbook, which will make its debut at a Code4Lib preconference workshop in March. Below is both the “finished” introduction and table-of-contents.

This workbook outlines sets of hands-on exercises surrounding a computer system called the Distant Reader — https://distantreader.org.

By going through the workbook, you will become familiar with the problems the Distant Reader is designed to address, how to submit content to the Reader, how to download the results (affectionately called “study carrels”), and how to interpret them. The bulk of the workbook is about the latter. Interpretation can be as simple as reading a narrative report in your Web browser, as complex as doing machine learning, and everything else in-between.

You will need to bring very little to the workbook in order to get very much out. At the very least, you will need a computer with a Web browser and an Internet connection. A text editor such as Notepad++ for Windows or BBEdit for Macintosh will come in very handy, but a word processor of any type will do in a pinch. You will want some sort of spreadsheet application for reading tabular data, and Microsoft Excel or Macintosh Numbers will both work quite well. All the other applications used in the workbook are freely available for downloading and cross-platform in nature. You may need to install a Java virtual machine in order to use some of them, but Java is probably already installed on your computer.

I hope you enjoy using the Distant Reader. It helps me use and understand large volumes of text quickly and easily.

I. What is the Distant Reader, and why should I care?

A. The Distant Reader is a tool for reading

B. How it works

C. What it does

II. Five different types of input

A. Introduction

B. A file

C. A URL

D. A list of URLs

E. A zip file

F. A zip file with a companion CSV file

G. Summary

III. Submitting "experiments" and downloading "study carrels"

IV. An introduction to study carrels

V. The structured data of study carrels; taking inventory through the manifest

VI. Using combinations of desktop tools to analyze the data

A. Introduction - The three essential types of desktop tools

B. Text editors

C. Spreadsheet/database applications

D. Analysis applications

i. Wordle and Wordle recipes

ii. AntConc and AntConc recipes

iii. Excel and Excel recipes

iv. OpenRefine and OpenRefine recipes

v. Topic Modeling Tool and Tool recipes

VII. Using command-line tools to dig even deeper

VIII. Summary/conclusion

IX. About the author

As per usual these days, the “code” is available on GitHub.

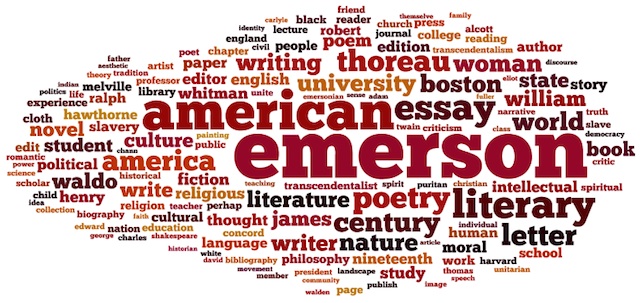

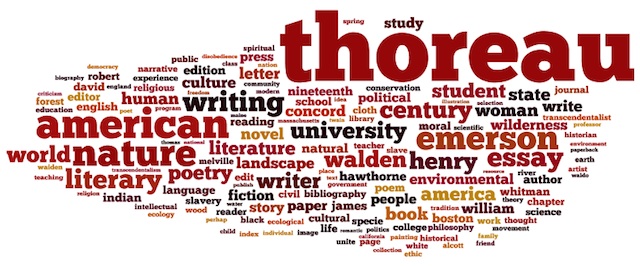

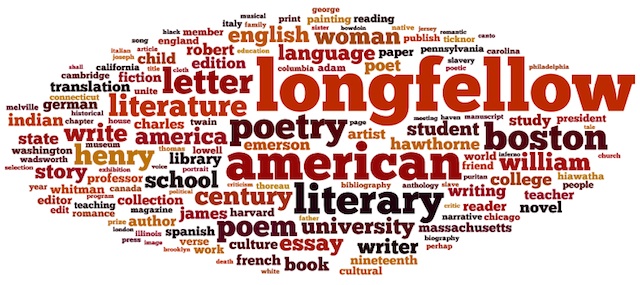

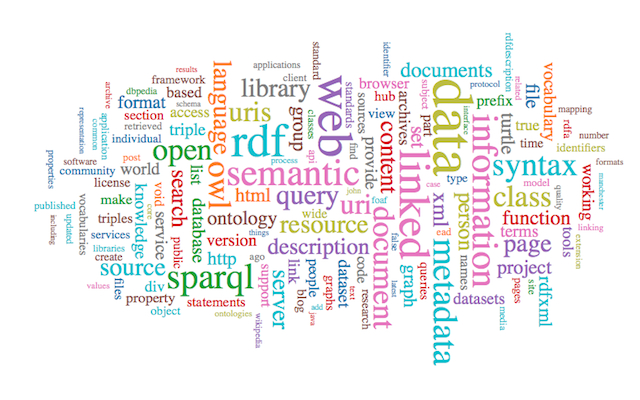

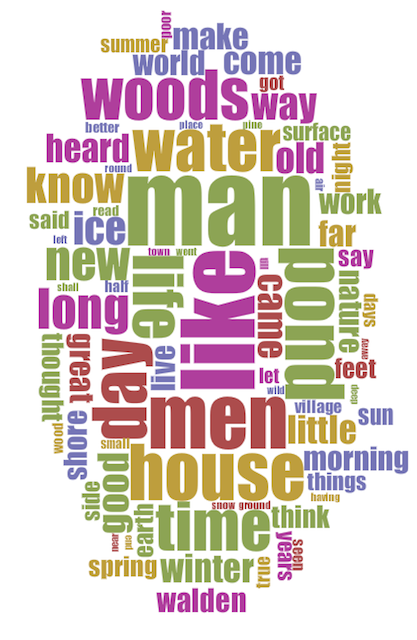

2020-01-31T18:57:29+00:00 Life of a Librarian: Wordle and the Distant Reader http://sites.nd.edu/emorgan/2020/01/wordle/

Visualized word frequencies, while often considered sophomoric, can be quite useful when it comes to understanding a text, especially when the frequencies are focused on things like parts-of-speech, named entities, or co-occurrences. Wordle visualizes such frequencies very well. For example, it can visualize the 100 most frequent words in the Iliad and the Odyssey, the 100 most frequent nouns in the Iliad and the Odyssey, or the statistically significant words associated with the word ship from the Iliad and the Odyssey.

Here is a generic Wordle recipe where Wordle will calculate the frequencies for you:

Congratulations, you have just visualized the whole of your study carrel.

Here is another recipe, a recipe where you supply the frequencies (or any other score):

Notice how you used a variety of generic applications to achieve the desired result. The word/value pairs given to Wordle do not have to be frequencies. Instead they can be any number of different scores or weights. Keep your eyes open for word/value combinations. They are everywhere. Word clouds have been given a bad rap. Wordle is a very useful tool.
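Word/value pairs can also be generated with a short script. The Python sketch below turns a dictionary of scores into one pair per line. The colon separator is an assumption about what the receiving application expects, so check the application's own instructions and change the separator as needed. The scores themselves are made up.

```python
from collections import Counter

def word_value_pairs(scores, separator=":", limit=100):
    """Format the highest-scoring words as 'word<separator>value' lines."""
    ranked = Counter(scores).most_common(limit)
    return [f"{word}{separator}{value}" for word, value in ranked]

# Hypothetical scores; these could be frequencies or any other weights.
SCORES = {"ship": 42, "sea": 17, "king": 8}

print("\n".join(word_value_pairs(SCORES, limit=2)))
```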

2020-01-29T18:13:33+00:00 Life of a Librarian: The Distant Reader and a Web-based demonstration http://sites.nd.edu/emorgan/2020/01/dr-ucla/

The following is an announcement of a Web-based demonstration of the Distant Reader:

Please join us for a web-based demo and Q&A on The Distant Reader, a web-based text analysis toolset for reading and analyzing texts that removes the hurdle of acquiring computational expertise. The Distant Reader offers a ready way to onboard scholars to text analysis and its possibilities. Eric Lease Morgan (Notre Dame) will demo his tool and answer your questions. This session is suitable for digital textual scholars at any level, from beginning to expert.

- When: February 12, 2020 @ 1-2pm Pacific Standard Time

- Where: Online (https://ucla.zoom.us/j/3107947789) or at UCLA in 1041 Public Affairs Building

The Distant Reader: Reading at scale

The Distant Reader is a tool for reading. It takes an arbitrary amount of unstructured data (text) as input, and it outputs sets of structured data for analysis — reading. Given a corpus of just about any size (hundreds of books or thousands of journal articles), the Distant Reader analyzes the corpus, and outputs a myriad of reports enabling the researcher to use and understand the corpus. Designed with college students, graduate students, scientists, or humanists in mind, the Distant Reader is intended to supplement the traditional reading process.

This presentation outlines the problems the Reader is intended to address as well as the way it is implemented on the Jetstream platform with the help of both software and personnel resources from XSEDE. The Distant Reader is freely available for anybody to use at https://distantreader.org.

Other Distant Reader links of possible interest include:

- “study carrels” – http://carrels.distantreader.org

- blog postings – http://sites.nd.edu/emorgan/category/distant-reader/

- Slack channel – http://bit.ly/distantreader-slack

- Twitter feed – http://twitter.com/readerdistant

- source code – https://github.com/ericleasemorgan/reader

‘Hope to see you there?

2020-01-18T00:07:40+00:00 Life of a Librarian: Distant Reader “study carrels”: A manifest http://sites.nd.edu/emorgan/2019/12/reader-manifest/

The result of the Distant Reader process is the creation of a “study carrel”, a set of structured data files intended to help you to further “read” your corpus. Using a previously created study carrel as an example, this blog posting enumerates & outlines the contents of a typical carrel. A future blog posting will describe ways to use & understand the files outlined here. Therefore, the text below is merely a kind of manifest.

Wall Paper by Eric

The result of downloading and uncompressing the Distant Reader study carrel is a directory/folder containing a standard set of files and subdirectories. Each of these files and subdirectories is listed & described below:

[1] Airavata – https://airavata.apache.org

2019-12-28T00:10:07+00:00 Life of a Librarian: A Distant Reader Field Trip to Bloomington http://sites.nd.edu/emorgan/2019/12/bloomington/

Yesterday I was in Bloomington (Indiana) for a Distant Reader field trip.

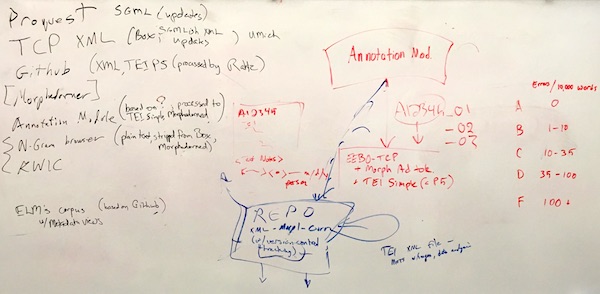

More specifically, I met with Marlon Pierce and Team XSEDE to talk about Distant Reader next steps. We discussed the possibility of additional grant opportunities, possible ways to exploit the Airavata/Django front-end, and Distant Reader embellishments such as:

Along the way Marlon & I visited the data center where I actually laid hands on the Reader. We also visited John Walsh of the HathiTrust Research Center where I did a two-fold show & tell: 1) downloading HathiTrust plain text files as well as PDF documents using htid2books, and 2) the Distant Reader, of course. As a bonus, there was a cool mobile hanging from the ceiling of Luddy Hall.

“A good time was had by all.”

The Distant Reader is a tool for reading. [1]

The Distant Reader empowers one to use & understand large amounts of textual information both quickly & easily. For example, the Distant Reader can consume the entire issue of a scholarly journal, the complete works of a given author, or the content found at the other end of an arbitrarily long list of URLs. Thus, the Distant Reader is akin to a book’s table-of-contents or back-of-the-book index but at scale. It simplifies the process of identifying trends & anomalies in a corpus, and then it enables a person to further investigate those trends & anomalies.

The Distant Reader is designed to “read” everything from a single item to a corpus of thousands of items. It is intended for the undergraduate student who wants to read the whole of their course work in a given class, the graduate student who needs to read hundreds (thousands) of items for their thesis or dissertation, the scientist who wants to review the literature, or the humanist who wants to characterize a genre.

The Distant Reader takes five different forms of input:

Once the input is provided, the Distant Reader creates a cache — a collection of all the desired content. This is done via the input or by crawling the ‘Net. Once the cache is collected, each & every document is transformed into plain text, and along the way basic bibliographic information is extracted. The next step is analysis against the plain text. This includes rudimentary counts & tabulations of ngrams, the computation of readability scores & keywords, basic topic modeling, parts-of-speech & named entity extraction, summarization, and the creation of a semantic index. All of these analyses are manifested as tab-delimited files and distilled into a single relational database file. After the analysis is complete, two reports are generated: 1) a simple plain text file which is very tabular, and 2) a set of HTML files which are more narrative and graphical. Finally, everything that has been accumulated & generated is compressed into a single zip file for downloading. This zip file is affectionately called a “study carrel“. It is completely self-contained and includes all of the data necessary for more in-depth analysis.
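To make “rudimentary counts & tabulations of ngrams” a bit more concrete, here is a minimal Python sketch of the general technique. It is not the Reader's own code, and the function name and sample text are made up for illustration.

```python
import re
from collections import Counter

def ngrams(text, n=2):
    """Tabulate every run of n consecutive words in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    # Zip the token list against shifted copies of itself.
    grams = zip(*(tokens[offset:] for offset in range(n)))
    return Counter(" ".join(gram) for gram in grams)

TEXT = "the wine dark sea and the wine dark ship"

for gram, count in ngrams(TEXT, 2).most_common(3):
    print(gram, count, sep="\t")
```

Setting n to 1 yields simple word frequencies, while larger values of n surface repeated phrases.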

The Distant Reader supplements the traditional reading process. It does this in the way of traditional reading apparatus (tables of content, back-of-book indexes, page numbers, etc), but it does it more specifically and at scale.

Put another way, the Distant Reader can answer a myriad of questions about individual items or the corpus as a whole. Such questions are not readily apparent through traditional reading. Examples include but are not limited to:

People who use the Distant Reader look at the reports it generates, and they often say, “That’s interesting!” This is because it highlights characteristics of the corpus which are not readily apparent. If you were asked what a particular corpus was about or what are the names of people mentioned in the corpus, then you might answer with a couple of sentences or a few names, but with the Distant Reader you would be able to be more thorough with your answer.

The questions outlined above are not necessarily apropos to every student, researcher, or scholar, but the answers to many of these questions will lead to other, more specific questions. Many of those questions can be answered directly or indirectly through further analysis of the structured data provided in the study carrel. For example, each & every feature of each & every sentence of each & every item in the corpus has been saved in a relational database file. By querying the database, the student can extract every sentence containing a given word or matching a given grammar to answer a question such as “How was the king described before & after the civil war?” or “How did this paper’s influence change over time?”
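The sort of query described above can be sketched with Python's built-in sqlite3 module. The example builds a small, in-memory database so it can be run as-is. The table name, the column names, and the sample sentences are hypothetical and do not reflect the actual schema of a study carrel's database.

```python
import sqlite3

# A hypothetical, in-memory stand-in for a carrel's database; the
# table and column names are assumptions, not the Reader's schema.
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE sentences (id TEXT, sid INTEGER, sentence TEXT)")
connection.executemany(
    "INSERT INTO sentences VALUES (?, ?, ?)",
    [
        ("book-01", 1, "The king was generous before the war."),
        ("book-01", 2, "The fleet left at dawn."),
        ("book-02", 1, "After the war the king was feared."),
    ],
)

def sentences_with(connection, word):
    """Return (id, sentence) pairs whose sentence contains the word."""
    # LIKE does a substring match, so "king" would also match "kingdom".
    query = "SELECT id, sentence FROM sentences WHERE sentence LIKE ? ORDER BY id, sid"
    return connection.execute(query, (f"%{word}%",)).fetchall()

for identifier, sentence in sentences_with(connection, "king"):
    print(identifier, sentence, sep="\t")
```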

A lot of natural language processing requires pre-processing, and the Distant Reader does this work automatically. For example, collections need to be created, and they need to be transformed into plain text. The text will then be evaluated in terms of parts-of-speech and named-entities. Analysis is then done on the results. This analysis may be as simple as the use of a concordance or as complex as the application of machine learning. The Distant Reader “primes the pump” for this sort of work because all the raw data is already in the study carrel. The Distant Reader is not intended to be used alone. It is intended to be used in conjunction with other tools, everything from a plain text editor, to a spreadsheet, to a database, to topic modelers, to classifiers, to visualization tools.

I don’t know about you, but now-a-days I can find plenty of scholarly & authoritative content. My problem is not one of discovery but instead one of comprehension. How do I make sense of all the content I find? The Distant Reader is intended to address this question by making observations against a corpus and providing tools for interpreting the results.

[1] Distant Reader – https://distantreader.org

2019-11-09T02:25:04+00:00 Life of a Librarian: Project Gutenberg and the Distant Reader http://sites.nd.edu/emorgan/2019/11/pg-dr/

The venerable Project Gutenberg is perfect fodder for the Distant Reader, and this essay outlines how & why. (tl;dnr: Search my mirror of Project Gutenberg, save the result as a list of URLs, and feed them to the Distant Reader.)

To date, Project Gutenberg is a corpus of more than 60,000 freely available transcribed ebooks. The texts are predominantly in English, but many languages are represented. Many academics look down on Project Gutenberg, probably because it is not as scholarly as they desire, or maybe because the provenance of the materials is in dispute. Despite these things, Project Gutenberg is a wonderful resource, especially for high school students, college students, or life-long learners. Moreover, its transcribed nature eliminates any problems of optical character recognition, such as one encounters with the HathiTrust. The content of Project Gutenberg is all but perfectly formatted for distant reading.

Unfortunately, the interface to Project Gutenberg is less than desirable; the index to Project Gutenberg is limited to author, title, and “category” values. The interface does not support free text searching, and there is limited support for fielded searching and Boolean logic. Similarly, the search results are not very interactive nor faceted. Nor is there any application programmer interface to the index. With so much “clean” data, so much more could be implemented. In order to demonstrate the power of distant reading, I endeavored to create a mirror of Project Gutenberg while enhancing the user interface.

To create a mirror of Project Gutenberg, I first downloaded a set of RDF files describing the collection. [2] I then wrote a suite of software which parses the RDF, updates a database of desired content, loops through the database, caches the content locally, indexes it, and provides a search interface to the index. [3, 4] The resulting interface is ill-documented but 100% functional. It supports free text searching, phrase searching, fielded searching (author, title, subject, classification code, language) and Boolean logic (using AND, OR, or NOT). Search results are faceted enabling the reader to refine their query sans a complicated query syntax. Because the cached content includes only English language materials, the index is only 33,000 items in size.

The Distant Reader is a tool for reading. It takes an arbitrary amount of unstructured data (text) as input, and it outputs sets of structured data for analysis — reading. Given a corpus of any size, the Distant Reader will analyze the corpus, and it will output a myriad of reports enabling you to use & understand the corpus. The Distant Reader is intended to supplement the traditional reading process. Project Gutenberg and the Distant Reader can be used hand-in-hand.

As described in a previous posting, the Distant Reader can take five different types of input. [5] One of those inputs is a file where each line in the file is a URL. My locally implemented mirror of Project Gutenberg enables the reader to search & browse in a manner similar to the canonical version of Project Gutenberg, but with two exceptions. First & foremost, once a search has been done against my mirror, one of the resulting links is “only local URLs”. For example, below is an illustration of the query “love AND honor AND truth AND justice AND beauty”, and the “only local URLs” link is highlighted:

By selecting the “only local URLs”, a list of… URLs is returned, like this:

This list of URLs can then be saved as a file, and any number of things can be done with the file. For example, there are Google Chrome extensions for the purposes of mass downloading. The file of URLs can be fed to command-line utilities (e.g., curl or wget) also for the purposes of mass downloading. In fact, assuming the file of URLs is named love.txt, the following command will download the files in parallel and really fast:

cat love.txt | parallel wget

This same file of URLs can be used as input against the Distant Reader, and the result will be a “study carrel” where the whole corpus could be analyzed — read. For example, the Reader will extract all the nouns, verbs, and adjectives from the corpus. Thus you will be able to answer what and how questions. It will pull out named entities and enable you to answer who and where questions. The Reader will extract keywords and themes from the corpus, thus outlining the aboutness of your corpus. From the results of the Reader you will be set up for concordancing and machine learning (such as topic modeling or classification) thus enabling you to search for more narrow topics or “find more like this one”. The search for love, etc returned more than 8000 items. Just less than 500 of them were returned in the search result, and the Reader empowers you to read all 500 of them at one go.

Project Gutenberg is a very useful resource because the content is: 1) free, and 2) transcribed. Mirroring Project Gutenberg is not difficult, and by doing so an interface to it can be enhanced. Project Gutenberg items are perfect items for reading & analysis by the Distant Reader. Search Project Gutenberg, save the results as a file, feed the file to the Reader and… read the results at scale.

† All puns are intended.

[1] Michael Hart in Roanoke (Indiana) – video: https://youtu.be/eeoBbSN9Esg; blog posting: http://infomotions.com/blog/2010/03/michael-hart-in-roanoke-indiana/

[2] The various Project Gutenberg feeds, including the RDF, are located at https://www.gutenberg.org/wiki/Gutenberg:Feeds

[3] The suite of software to cache and index Project Gutenberg is available on GitHub at https://github.com/ericleasemorgan/gutenberg-index

[4] My full text index to the English language texts in Project Gutenberg is available at http://dh.crc.nd.edu/sandbox/gutenberg/cgi-bin/search.cgi

[5] The Distant Reader and its five different types of input – http://sites.nd.edu/emorgan/2019/10/dr-inputs/

2019-11-06T01:56:30+00:00 Life of a Librarian: OJS Toolbox http://sites.nd.edu/emorgan/2019/10/ojs-toolbox/

Given an Open Journal Systems (OJS) root URL and an authorization token, cache all JSON files associated with the given OJS title, and optionally output rudimentary bibliographics in the form of a tab-separated value (TSV) stream. [0]

The Toolbox is written in Bash. To cache the metadata, you will need to have additional software as part of your file system: curl and jq. [3, 4] Curl is used to interact with the API. Jq is used to read & parse the resulting JSON streams. When & if you want to transform the cached JSON files into rudimentary bibliographics, then you will also need to install GNU Parallel, a tool which makes parallel processing trivial. [5]

Besides the software, you will need three pieces of information. The first is the root URL of the OJS system/title you wish to use. This value will probably look something like this –> https://example.com/index.php/foo (ask the OJS systems administrator regarding the details). The second piece of information is an authorization token. If an “api secret” has been created by the local OJS systems administrator, then each person with an OJS account ought to have been granted a token. Again, ask the OJS systems administrator for details. The third piece of information is the name of a directory where your metadata will be cached. For the sake of an example, assume the necessary values are:

Once you have gotten this far, you can cache the totality of the issue metadata:

$ ./bin/harvest.sh https://example.com/index.php/foo xyzzy bar

More specifically, `harvest.sh` will create a directory called bar. It will then determine how many issues exist in the title foo. It will then harvest sets of issue data, parse each set into individual issue files, and save the result as JSON files in the bar directory. You now have a “database” containing all the bibliographic information of a given title.

For my purposes, I need a TSV file with four columns: 1) author, 2) title, 3) date, and 4) url. Such is the purpose of `issues2tsv.sh` and `issue2tsv.sh`. The first script, `issues2tsv.sh`, takes a directory as input. It then outputs a simple header, finds all the JSON files in the given directory, and passes them along (in parallel) to `issue2tsv.sh` which does the actual work. Thus, to create my TSV file, I submit a command like this:

$ ./bin/issues2tsv.sh bar > ./bar.tsv

The resulting file (bar.tsv) looks something like this:

| author | title | date | url |
|---|---|---|---|
| Kilgour | The Catalog | 1972-09-01 | https://example.com/index.php/foo/article/download/5738/5119 |
| McGee | Two Designs | 1972-09-01 | https://example.com/index.php/foo/article/download/5739/5120 |
| Saracevic | Book Reviews | 1972-09-01 | https://example.com/index.php/foo/article/download/5740/5121 |

Given such a file, I can easily download the content of a given article, extract any of its plain text, perform various natural language processing tasks against it, text mine the whole, full text index the whole, apply various bits of machine learning against the whole, and in general, “read” the totality of the journal. See The Distant Reader for details. [6]
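As a rough illustration of what can be done with such a file, the following Python sketch reads TSV text with the four columns described above (author, title, date, and url) and pairs each URL with a short local file name. The naming scheme (author plus the last segment of the URL) is purely an assumption for the sake of the example; it is not part of the Toolbox.

```python
import csv
import io

def read_articles(tsv_text):
    """Parse TSV text with author, title, date, and url columns into a list of dicts."""
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t", quoting=csv.QUOTE_NONE)
    return list(reader)

def plan_downloads(articles):
    """Pair each article URL with a simple local name (hypothetical naming scheme)."""
    plan = []
    for article in articles:
        # The last segment of the URL serves as a key; this is only an illustration
        key = article["url"].rstrip("/").split("/")[-1]
        plan.append((article["url"], "{}-{}".format(article["author"].lower(), key)))
    return plan

# Two rows copied from the example table above
sample = (
    "author\ttitle\tdate\turl\n"
    "Kilgour\tThe Catalog\t1972-09-01\thttps://example.com/index.php/foo/article/download/5738/5119\n"
    "McGee\tTwo Designs\t1972-09-01\thttps://example.com/index.php/foo/article/download/5739/5120\n"
)

for url, name in plan_downloads(read_articles(sample)):
    print(name, url)
```

Each pair could then be handed to a download tool such as curl.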

[0] OJS Toolbox – https://github.com/ericleasemorgan/ojs-toolbox

[1] OJS – https://pkp.sfu.ca/ojs/

[2] OJS API – https://docs.pkp.sfu.ca/dev/api/ojs/3.1

[3] curl – https://curl.haxx.se

[4] jq – https://stedolan.github.io/jq/

[5] GNU Parallel – https://www.gnu.org/software/parallel/

[6] Distant Reader – https://distantreader.org

The Distant Reader can take five different types of input, and this blog posting describes what they are.

Wall Paper by Eric

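At the present time, the Reader can accept five different types of input, and they include: 1) a single file, 2) a single URL, 3) a list of URLs, 4) a zip file, and 5) a zip file with a companion CSV file.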

Each of these different types of input is elaborated upon below.

The simplest form of input is a single file from your computer. This can be just about any file available to you, but to make sense, the file needs to contain textual data. Thus, the file can be a Word document, a PDF file, an Excel spreadsheet, an HTML file, a plain text file, etc. A file in the form of an image will not work because it contains zero text. Also, not all PDF files are created equal. Some PDF files are only facsimiles of their originals. Such PDF files are merely sets of images concatenated together. In order for PDF files to be used as input, the PDF files need to have been “born digitally” or they need to have had optical character recognition previously applied against them. Most PDF files are born digitally, and they do not suffer from being facsimiles.

A good set of use-cases for single file input is the whole of a book, a long report, or maybe a journal article. Submitting a single file to the Distant Reader is quick & easy, but the Reader is designed for analyzing larger rather than smaller corpora. Thus, supplying a single journal article to the Reader doesn’t make much sense; the use of the traditional reading process probably makes more sense for a single journal article.

The Distant Reader can take a single URL as input. Given a URL, the Reader will turn into a rudimentary Internet spider and build a corpus. More specifically, given a URL, the Reader will:

In short, given a URL, the Reader will cache the URL’s content, crawl the URL one level deep, cache the result, and stop caching.
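The "cache, crawl one level deep, cache, and stop" behavior can be sketched in a few lines of Python. This is not the Reader's actual code; it is a minimal illustration using only the standard library, and the `fetch` argument is a hypothetical function (supplied by the caller) which returns the HTML found at a URL.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

class LinkExtractor(HTMLParser):
    """Collect the href values of all anchor tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

def extract_links(base_url, html):
    """Return absolute, de-duplicated http(s) URLs found in the HTML, in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    seen, result = set(), []
    for href in parser.links:
        # Resolve relative links and drop any #fragment
        absolute, _fragment = urldefrag(urljoin(base_url, href))
        if absolute.startswith(("http://", "https://")) and absolute not in seen:
            seen.add(absolute)
            result.append(absolute)
    return result

def crawl_one_level(url, fetch):
    """Cache the page at url plus every page it links to, and then stop."""
    cache = {url: fetch(url)}
    for link in extract_links(url, cache[url]):
        if link not in cache:
            cache[link] = fetch(link)
    return cache
```

Because the links found on the second-level pages are never followed, the size of the resulting corpus is bounded by the number of links on the first page.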

Like the single file approach, submitting a URL to the Distant Reader is quick & easy, but there are a number of caveats. First of all, the Reader does not come with very many permissions, and just because you are authorized to read the content at the other end of a URL does not mean the Reader has the same authorization. A lot of content on the Web resides behind paywalls and firewalls. The Reader can only cache 100% freely accessible content.

“Landing pages” and “splash pages” represent additional caveats. Many of the URLs passed around the ‘Net do not point to the content itself, but instead they point to ill-structured pages describing the content (metadata pages). Such pages may include things like authors, titles, and dates, but these things are presented in neither a consistent nor a computer-readable fashion; they are laid out with aesthetics or graphic design in mind. These pages do contain pointers to the content you want to read, but the content may be two or three more clicks away. Be wary of URLs pointing to landing pages or splash pages.

Another caveat to this approach is the existence of extraneous input due to navigation. Many Web pages include links for navigating around the site. They also include links to things like “contact us” and “about this site”. Again, the Reader is sort of stupid. If found, the Reader will crawl such links and include their content in the resulting corpus.

Despite these drawbacks there are a number of excellent use-cases for single URL input. One of the best is Wikipedia articles. Feed the Reader a URL pointing to a Wikipedia article. The Reader will cache the article itself, and then extract all the URLs the article uses as citations. The Reader will then cache the content of the citations, and then stop caching.

Similarly, a URL pointing to an open access journal article will function just like the Wikipedia article, and this will be even more fruitful if the citations are in the form of freely accessible URLs. Better yet, consider pointing the Reader to the root of an open access journal issue. If the site is not overly full of navigation links, and if the URLs to the content itself are not buried, then the whole of the issue will be harvested and analyzed.

Another good use-case is the home page of some sort of institution or organization. Want to know about Apple Computer, the White House, a conference, or a particular department of a university? Feed the root URL of any of these things to the Reader, and you will learn something. At the very least, you will learn how the organization prioritizes its public face. If things are more transparent than not, then you might be able to glean the names and addresses of the people in the organization, the public policies of the organization, or the breadth & depth of the organization.

Yet another excellent use-case includes blogs. Blogs often contain content at their root. Navigation links abound, but more often than not the navigation links point to more content. If the blog is well-designed, then the Reader may be able to create a corpus from the whole thing, and you can “read” it in one go.

The third type of input is a list of URLs. The list is expected to be manifested as a plain text file, and each line in the file is a URL. Use whatever application you desire to build the list, but save the result as a .txt file, and you will probably have a plain text file.‡

Caveats? Like the single URL approach, the list of URLs must point to freely available content, and pointing to landing pages or splash pages is probably to be avoided. Unlike the single URL approach, the URLs in the list will not be used as starting points for Web crawling. Thus, if the list contains ten items, then ten items will be cached for analysis.
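For what it is worth, a list of URLs is easy to tidy up before submitting it. The following Python sketch (an illustration only, not something the Reader does for you) reads the text of such a list, tolerates both Linux/Macintosh and Windows line endings, and removes blank lines and duplicates.

```python
def read_url_list(text):
    """Return the URLs in a plain text list, one per line, without blanks or duplicates."""
    urls = []
    # splitlines() treats LF, CRLF, and CR alike, so Windows-style files are handled too
    for line in text.splitlines():
        line = line.strip()
        if line and line not in urls:
            urls.append(line)
    return urls

# A small list mixing Windows (CRLF) and Linux/Macintosh (LF) line endings
example = "https://example.com/a\r\nhttps://example.com/b\r\n\r\nhttps://example.com/a\n"
print("\n".join(read_url_list(example)))
```

Writing the result back out with plain line-feed characters yields the sort of file that has been tested with the Reader.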

Another caveat is the actual process of creating the list; I have learned that it is actually quite difficult to create lists of URLs. Copying & pasting gets old quickly. Navigating a site and right-clicking on URLs is tedious. While search engines & indexes often provide some sort of output in list format, the lists are poorly structured and not readily amenable to URL extraction. On the other hand, there are more than a few URL extraction tools. I use a Google Chrome extension called Link Grabber. [1] Install Link Grabber. Use Chrome to visit a site. Click the Link Grabber button, and all the links in the document will be revealed. Copy the links and paste them into a document. Repeat until you get tired. Sort and peruse the list of links. Remove the ones you don’t want. Save the result as a plain text file.‡ Feed the result to the Reader.

Despite these caveats, the list of URLs approach is enormously scalable; the list of URLs approach is the most scalable input option. Given a list of five or six items, the Reader will do quite well, but the Reader will operate just as well if the list contains dozens, hundreds, or even thousands of URLs. Imagine reading the complete works of your favorite author or the complete run of an electronic journal. Such is more than possible with the Distant Reader.‡

The Distant Reader can take a zip file as input. Create a folder/directory on your computer. Copy just about any file into the folder/directory. Compress the folder/directory into a .zip file. Submit the result to the Reader.

Like the other approaches, there are a few caveats. First of all, the Reader is not able to accept .zip files whose size is greater than 64 megabytes. While we do it all the time, the World Wide Web was not really designed to push around files of any great size, and 64 megabytes is/was considered plenty. Besides, you will be surprised how many files can fit in a 64 megabyte file.

Second, the computer gods never intended file names to contain things other than simple Romanesque letters and a few rudimentary characters. Nowadays our file names contain spaces, quote marks, apostrophes, question marks, back slashes, forward slashes, colons, commas, etc. Moreover, file names might be 64 characters long or longer! While every effort has been made to accommodate file names with such characters, your mileage may vary. Instead, consider using file names which are shorter, simpler, and have some sort of structure. An example might be first word of author’s last name, first meaningful word of title, year (optional), and extension. Herman Melville’s Moby Dick might thus be named melville-moby.txt. In the end the Reader will be less confused, and you will be more able to find things on your computer.
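The naming convention suggested above is simple enough to automate. Here is a minimal Python sketch of it; the list of "unmeaningful" words and the reading of "64 megabytes" as 64 × 1024 × 1024 bytes are my own assumptions, and the functions are illustrations, not a part of the Reader.

```python
import re
import unicodedata

MAX_ZIP_BYTES = 64 * 1024 * 1024  # assumption: a megabyte means 1024 * 1024 bytes
STOP_WORDS = {"a", "an", "the", "of", "on", "and", "in", "to"}  # illustrative only

def _words(text):
    """Lower-case the text, drop accents, and split on anything other than letters or digits."""
    ascii_text = unicodedata.normalize("NFKD", text).encode("ascii", "ignore").decode("ascii")
    return re.findall(r"[a-z0-9]+", ascii_text.lower())

def simple_name(last_name, title, extension, year=None):
    """Build a name from the author's last name, first meaningful title word, optional year, and extension."""
    author_word = _words(last_name)[0]
    title_word = next(word for word in _words(title) if word not in STOP_WORDS)
    parts = [author_word, title_word]
    if year is not None:
        parts.append(str(year))
    return "-".join(parts) + "." + extension.lstrip(".").lower()

def fits_limit(size_in_bytes):
    """Return True if a zip file of the given size is within the assumed limit."""
    return size_in_bytes <= MAX_ZIP_BYTES

print(simple_name("Melville", "Moby Dick", "txt"))
```

The size of an existing zip file can be had with `os.path.getsize`, and the result passed to `fits_limit`. The sketch raises an error when a name or title contains no usable words, which seems reasonable for something this small.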

There are a few advantages to the zip file approach. First, you can circumvent authorization restrictions; you can put licensed content into your zip files and it will be analyzed just like any other content. Second, the zip file approach affords you the opportunity to pre-process your data. For example, suppose you have downloaded a set of PDF files, and each page includes some sort of header or footer. You could transform each of these PDF files into plain text, and then use some sort of find/replace function to remove the headers & footers. Save the result, zip it up, and submit it to the Reader. The resulting analysis will be more accurate.

There are many use-cases for the zip file approach. Master’s and Ph.D. students are expected to read large amounts of material. Save all those things into a folder, zip them up, and feed them to the Reader. You have been given a set of slide decks from a conference. Zip them up and feed them to the Reader. A student is expected to read many different things for History 101. Download them all, put them in a folder, zip them up, and submit them to the Distant Reader. You have written many things but they are not on the Web. Copy them to a folder, zip them up, and “read” them with the… Reader.

The final form of input is a zip file with a companion comma-separated value (CSV) file — a metadata file.

As the size of your corpus increases, so does the need for context. This context can often be manifested as metadata (authors, titles, dates, subject, genre, formats, etc.). For example, you might want to compare & contrast who wrote what. You will probably want to observe themes over space & time. You might want to see how things differ between different types of documents. To do this sort of analysis you will need to know metadata regarding your corpus.

As outlined above, the Distant Reader first creates a cache of content — a corpus. This is the raw data. In order to do any analysis against the corpus, the corpus must be transformed into plain text. A program called Tika is used to do this work. [2] Not only does Tika transform just about any file into plain text, but it also does its best to extract metadata. Depending on many factors, this metadata may include names of authors, titles of documents, dates of creation, number of pages, MIME-type, language, etc. Unfortunately, more often than not, this metadata extraction process fails and the metadata is inaccurate, incomplete, or simply non-existent.

This is where the CSV file comes in; by including a CSV file named “metadata.csv” in the .zip file, the Distant Reader will be able to provide meaningful context. In turn, you will be able to make more informed observations, and thus your analysis will be more thorough. Here’s how:

The zip file with a companion CSV file has all the strengths & weaknesses of the plain o’ zip file, but it adds some more. On the weakness side, creating a CSV file can be both tedious and daunting. On the other hand, many search engines & indexes export lists with author, title, and date metadata. One can use these lists as the starting point for the CSV file.♱ On the strength side, the addition of the CSV metadata file makes the Distant Reader’s output immeasurably more useful, and it leads the way to additional compare & contrast opportunities.
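Creating the CSV file need not be done by hand. The following Python sketch writes metadata records as CSV text using the standard csv module, which takes care of quoting values containing commas. The column names used here (file, author, title, and date) are an assumption made for the sake of illustration; check the Reader's documentation for the names it actually expects.

```python
import csv
import io

FIELDS = ["file", "author", "title", "date"]  # hypothetical column names

def build_metadata_csv(records):
    """Serialize a list of dicts as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for record in records:
        writer.writerow(record)
    return buffer.getvalue()

records = [
    {"file": "melville-moby.txt", "author": "Melville, Herman", "title": "Moby Dick", "date": "1851"},
]
print(build_metadata_csv(records), end="")
```

Save the output as metadata.csv, put it in the folder with the files it describes, and zip the whole thing up.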

To date, the Distant Reader takes five different types of input. Each type has its own set of strengths & weaknesses:

Happy reading!

‡ Distant Reader Bounty #1: To date, I have only tested plain text files using line-feed characters as delimiters; such is the format of plain text files in the Linux and Macintosh worlds. I will pay $10 to the first person who creates a plain text file of URLs delimited by carriage-return/line-feed characters (the format of Windows-based text files) and who demonstrates that such files break the Reader. “On your mark. Get set. Go!”

‡ Distant Reader Bounty #2: I will pay $20 to the first person who creates a list of 2,000 URLs and feeds it to the Reader.

♱ Distant Reader Bounty #3: I will pay $30 to the first person who writes a cross-platform application/script which successfully transforms a Zotero bibliography into a Distant Reader CSV metadata file.

[1] Link Grabber – http://bit.ly/2mgTKsp

[2] Tika – http://tika.apache.org

2019-10-19T00:29:14+00:00 Life of a Librarian: Invitation to hack the Distant Reader http://sites.nd.edu/emorgan/2019/06/hackathon/

We invite you to write a cool hack enabling students & scholars to “read” an arbitrarily large corpus of textual materials.

A website called The Distant Reader takes an arbitrary number of files or links to files as input. [1] The Reader then amasses the files locally, transforms them into plain text files, and performs quite a bit of natural language processing against them. [2] The result, in the form of a file system, is a set of operating system independent indexes which point to individual files from the input. [3] Put another way, each input file is indexed in a number of ways, and therefore accessible by any one or combination of the following attributes:

All of the things listed above are saved as plain text files, but they have also been reduced to an SQLite database (./etc/reader.db), which is also distributed with the file system.

Your mission, if you choose to accept it, is to write a cool hack against the Distant Reader’s output. By doing so, you will be enabling people to increase their comprehension of the given files. Here is a list of possible hacks:

In order for you to do your good work, you will need some Distant Reader output. Here are pointers to some such stuff:

With the exception of only a few files (./etc/reader.db, ./etc/reader.vec, and ./cache/*), all of the files in the Distant Reader’s output are plain text files. More specifically, they are either unstructured data files or delimited files. Despite any file’s extension, the vast majority of the files can be read with your favorite text editor, spreadsheet, or database application. To read the database file (./etc/reader.db), you will need an SQLite application. The files in the adr, bib, ent, pos, urls, or wrd directories are all tab delimited files. A program called OpenRefine is a WONDERFUL tool for reading and analyzing tab delimited files. [9] In fact, a whole lot can be learned through the skillful use of OpenRefine against the tab delimited files.
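As a starting point for a hack, one might first want to see what the database contains. The following Python sketch uses the standard sqlite3 module to list every table in an SQLite database along with its number of rows. It makes no assumptions about the names of the tables in ./etc/reader.db; it simply asks the database to describe itself.

```python
import sqlite3

def list_tables(connection):
    """Return a dict mapping each table name in the database to its row count."""
    names = [row[0] for row in connection.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
    counts = {}
    for name in names:
        # The names come from the database itself, so they are merely quoted here
        query = 'SELECT COUNT(*) FROM "{}"'.format(name)
        counts[name] = connection.execute(query).fetchone()[0]
    return counts

# Typical use against a study carrel (the path is the one mentioned above):
#   connection = sqlite3.connect("./etc/reader.db")
#   for name, count in list_tables(connection).items():
#       print(name, count)
```

From there, ordinary SQL queries against the listed tables are the obvious next step.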

[1] The home page of the Distant Reader is https://distantreader.org

[2] All of the code doing this processing is available on GitHub. See https://github.com/ericleasemorgan/reader

[3] This file system is affectionately known as a “study carrel”.

[4] An easy-to-use library for creating such vectors is a part of the Scikit Learn suite of software. See http://bit.ly/2F5EoxA

[5] The algorithm is described at https://en.wikipedia.org/wiki/Cosine_similarity, and a SciKit Learn module is available at http://bit.ly/2IaYcS3

[6] The library is called sql.js and it is available at https://github.com/kripken/sql.js/

[7] The Levenshtein distance is described here — https://en.wikipedia.org/wiki/Levenshtein_distance, and various libraries doing the good work are outlined at http://bit.ly/2F30roM

[8] Yet another SciKit Learn module may be of use here — http://bit.ly/2F5o2oS

[9] OpenRefine eats delimited files for lunch. See http://openrefine.org

2019-06-13T01:27:51+00:00 Life of a Librarian: Fantastic Futures: My take-aways http://sites.nd.edu/emorgan/2018/12/fantastic-futures/

This is the briefest of take-aways from my attendance at Fantastic Futures, a conference on artificial intelligence (AI) in libraries. [1] From the conference announcement introduction:

The Fantastic futures-conferences, which takes place in Oslo december 5th 2018, is a collaboration between the National Library of Norway and Stanford University Libraries, and was initiated by the National Librarian at the National Library of Norway, Aslak Sira Myhre and University Librarian at Stanford University Libraries, Michael Keller.

First of all, I had the opportunity to attend and participate in a pre-conference workshop. Facilitated by Nicole Coleman (Stanford University) and Svein Brygfjeld (National Library of Norway), the workshop’s primary purpose was to ask questions about AI in libraries, and to build community. To those ends the two dozen or so of us were divided into groups where we discussed what a few AI systems might look like. I was in a group discussing the possibilities of reading massive amounts of text and/or refining information retrieval based on reader profiles. In the end our group thought such things were feasible, and we outlined how they might be accomplished. Other groups discussed things such as metadata creation and collection development. Towards the end of the day we brainstormed next steps, and at the very least we will try to use the ai4lib mailing list to a greater degree. [2]

The next day, the first real day of the conference, was attended by more than a couple hundred people. Most were from Europe, obviously, but from my perspective about as many were librarians as non-librarians. There was an appearance by Nancy Pearl, who, as you may or may not know, is a Seattle Public Library librarian embodied as an action figure. [3] She was brought to the conference because the National Library of Norway’s AI system is named Nancy. A few notable quotes from some of the speakers, at least from my perspective, included:

The presenters generated lively discussion, and I believe the conference was considered a success by the vast majority of attendees. It is quite likely the conference will be repeated next year and be held at Stanford.

What are some of my take-aways? Hmmm:

It was an honor and a privilege to attend the pre-conference workshop and conference. I sincerely believe AI can be used in libraries, and the use can be effective. Putting AI into practice will take time, energy, & prioritization. How to do this and simultaneously “keep the trains running” will be a challenge. On the other hand, AI in libraries can be seen as an opportunity to demonstrate the inherent worth of cultural heritage institutions. ai4lib++

P.S. Along the way I got to see some pretty cool stuff: Viking ships, a fort, “The Scream”, and a “winterfest”. I also got to experience sunset at 3:30 in the afternoon.

[1] Fantastic Futures – https://www.nb.no/artikler/fantastic-futures/

[2] ai4lib – https://groups.google.com/forum/#!forum/ai4lib

[3] action figure – https://www.amazon.com/Nancy-Pearl-Librarian-Action-Figure/dp/B0006FU9EG

2018-12-11T02:43:06+00:00 Life of a Librarian: marc2catalog http://sites.nd.edu/emorgan/2018/07/marc2catalog/

Given a set of MARC records, output a set of library catalogs

This set of scripts will take a set of MARC data, parse it into a simple (rudimentary and SQLite) database, and then generate a report against the database in the form of plain text files: a set of “library catalogs & indexes”. These catalogs & indexes are intended to be printed, but they can also be used to support rudimentary search via one’s text editor. For extra credit, the programmer could read the underlying database, feed the result to an indexer, and create an OPAC (online public access catalog).

The system requires a bit of infrastructure: 1) Bash, 2) Perl, 3) a Perl module named MARC::Batch, 4) the DBI driver for SQLite.

The whole MARC-to-catalog process can be run with a single command:

./bin/make-all.sh <marc> <name>

Where <marc> is the name of the MARC file, and <name> is a one-word moniker for the collection. The distribution comes with sample data, and therefore an example execution includes:

./bin/make-all.sh ./etc/morse.mrc morse

The result ought to be the creation of a .db file in the ./etc directory, a collections directory, a sub-directory of collections, and a set of plain text files in the latter. The plain text files are intended to be printed or given away like candy to interested learners or scholars.
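To give a flavor of the "database in, plain text catalog out" idea, here is a minimal Python sketch which generates a title index from an SQLite table. The scripts themselves are written in Bash and Perl, and the table and column names used here (records, title, author, and year) are hypothetical; they are not the schema marc2catalog actually creates.

```python
import sqlite3

def title_index(connection):
    """Return a plain text title index, one line per record, sorted by title."""
    rows = connection.execute(
        "SELECT title, author, year FROM records ORDER BY title COLLATE NOCASE")
    return "".join("{} / {} ({})\n".format(*row) for row in rows)

# A tiny in-memory database standing in for the real thing
connection = sqlite3.connect(":memory:")
connection.execute("CREATE TABLE records (title TEXT, author TEXT, year TEXT)")
connection.executemany("INSERT INTO records VALUES (?, ?, ?)", [
    ("Walden", "Thoreau", "1854"),
    ("Moby Dick", "Melville", "1851"),
])
print(title_index(connection), end="")
```

Author and subject indexes could be generated the same way by changing the ORDER BY clause.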

The code for marc2catalog ought to be available on GitHub.

2018-07-02T23:07:51+00:00 Mini-musings: Charting & graphing with Tableau Public http://infomotions.com/blog/2018/05/tableau/

They say, “A picture is worth a thousand words”, and through use of something like Tableau this can become a reality in text mining.

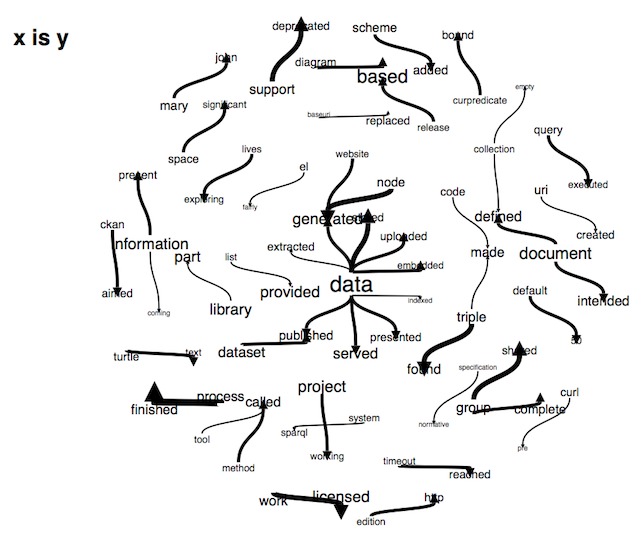

After extracting features from a text, you will have almost invariably created lists. Each of the items on the lists will be characterized with bits of context, thus transforming the raw data into information. These lists will probably take the shape of matrices (sets of rows & columns), but other data structures exist as well, such as networked graphs. Once the data has been transformed into information, you will want to make sense of the information, that is, turn the information into knowledge. Charting & graphing the data is one way to make that happen.

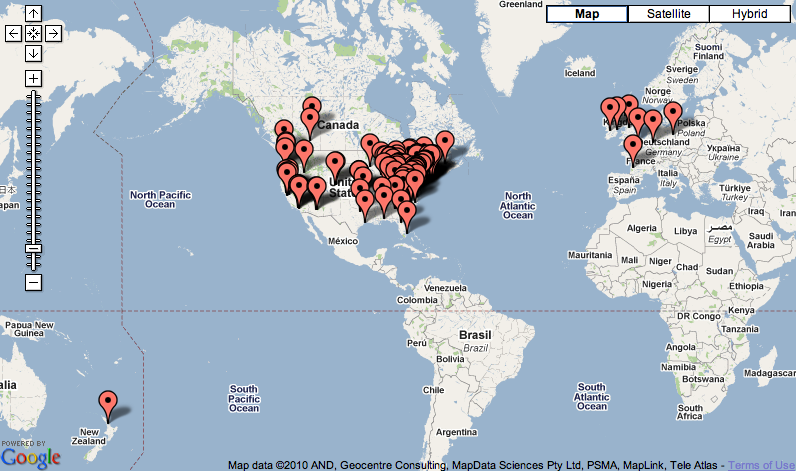

For example, the reader may have associated each word in a text with a part-of-speech, and then this association was applied across a corpus. The reader might then ask, “To what degree are words associated with each part-of-speech similar or different across items in the corpus? Do different items include similar or different personal pronouns, and therefore, are some documents more male, more female, or more gender neutral?” Alternatively, suppose the named entities have been extracted from items in a corpus, then the reader may want to know, “What countries, states, and/or cities are mentioned in the text/corpus, and to what degree? Are these texts ‘European’ in scope?”

A charting & graphing application like Tableau (or Tableau Public) can address these questions. [1] The first can be answered by enabling the reader to select one or more items from a corpus, select one or more parts-of-speech, counting & tabulating the intersected words, and displaying the result as a word cloud. The second question can be addressed similarly. Allow the reader to select items from a corpus, extract the names of places (countries, states, and cities), and plot the geographic coordinates on a global map. Once these visualizations are complete, they can be saved on the Web for others to use, for example:

Creating visualizations with Tableau (or Tableau Public) takes practice. Not only does the reader need to have structured data in hand, but one needs to be patient in the learning of the interface. To the author’s mind, the whole thing is reminiscent of the venerable HyperCard program from the 1980’s where one was presented with a number of “cards”, and programming interfaces were created by placing “objects” on them.

This workshop comes with two previously created Tableau workbooks located in the etc directory (word-clouds.twbx and maps.twbx). Describing the process to create them is beyond the scope of this workshop, but an outline follows:

Tableau is not really intended to be used against textual data/information; Tableau is more useful and more functional when applied to tabular numeric data. After all, the program is called… Tableau. This does not mean Tableau can not be exploited by the text miner. It just means it requires practice and an ability to articulate a question to be answered with the help of a visualization.

[1] Tableau Public – https://public.tableau.com/

2018-05-04T16:02:36+00:00 Mini-musings: Extracting parts-of-speech and named entities with Stanford tools http://infomotions.com/blog/2018/04/pos-ner/

Extracting specific parts-of-speech as well as “named entities”, and then counting & tabulating them can be quite insightful.

Parts-of-speech include nouns, verbs, adjectives, adverbs, etc. Named entities are specific types of nouns, including but not limited to, the names of people, places, organizations, dates, times, money amounts, etc. By creating features out of parts-of-speech and/or named entities, the reader can answer questions such as:

There are a number of tools enabling the reader to extract parts-of-speech, including the venerable Brill part-of-speech tagger implemented in a number of programming languages, CLAWS, Apache OpenNLP, and a specific part of the Stanford NLP suite of tools called the Stanford Log-linear Part-Of-Speech Tagger. [1] Named entities can be extracted with the Stanford Named Entity Recognizer (NER). [2] This workshop exploits the Stanford tools.

The Stanford Log-linear Part-Of-Speech Tagger is written in Java, making it a bit difficult for most readers to use in the manner it was truly designed, the author included. Luckily, the distribution comes with a command-line interface allowing the reader to use the tagger sans any Java programming. Because any part-of-speech or named entity extraction application is the result of a machine learning process, it is necessary to use a previously created computer model. The Stanford tools come with quite a few models from which to choose. The command-line interface also enables the reader to specify different types of output: tagged, XML, tab-delimited, etc. Because of all these options, and because the whole thing uses Java “archives” (read programming libraries or modules), the command-line interface is daunting, to say the least.

After downloading the distribution, the reader ought to be able to change to the bin directory, and execute either one of the following commands:

$ stanford-postagger-gui.sh

> stanford-postagger-gui.bat

The result will be a little window prompting for a sentence. Upon entering a sentence, tagged output will result. This is a toy interface, but demonstrates things quite nicely.

The full-blown command-line interface is a bit more complicated. From the command-line one can do either of the following, depending on the operating system:

$ stanford-postagger.sh models/english-left3words-distsim.tagger walden.txt

> stanford-postagger.bat models\english-left3words-distsim.tagger walden.txt

The result will be a long stream of tagged sentences, which I find difficult to parse. Instead, I prefer the inline XML output, which is much more difficult to execute but much more readable. Try either:

$ java -cp stanford-postagger.jar: edu.stanford.nlp.tagger.maxent.MaxentTagger -model models/english-left3words-distsim.tagger -outputFormat inlineXML -outputFormatOptions lemmatize -textFile walden.txt

> java -cp stanford-postagger.jar; edu.stanford.nlp.tagger.maxent.MaxentTagger -model models\english-left3words-distsim.tagger -outputFormat inlineXML -outputFormatOptions lemmatize -textFile walden.txt

In these cases, the result will be a long string of ill-formed XML. With a bit of massaging, this XML is much easier to parse with just about any computer programming language, believe it or not. The tagger can also be run in server mode, which makes batch processing a whole lot easier. The workshop’s distribution comes with a server and client application for exploiting these capabilities, but, unfortunately, they won’t run on Windows computers unless some sort of Linux shell has been installed. Some people can issue the following command to launch the server from the workshop’s distribution:

$ ./bin/pos-server.sh

The reader can run the client like this:

$ ./bin/pos-client.pl walden.txt

The result will be a well-formed XML file, which can be redirected to a file, processed by another script converting it into a tab-delimited file, and finally saved to a second file for reading by a spreadsheet, database, or data analysis tool:

$ ./bin/pos-client.pl walden.txt > walden.pos; ./bin/pos2tab.pl walden.pos > walden.tsv
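The conversion from XML to a tab-delimited file is not magic. The following Python sketch is not the workshop's pos2tab.pl; it is an illustration assuming the well-formed XML is made up of sentence elements (each with an id attribute) containing word elements (each with wid, pos, and lemma attributes). The names of the output columns are my own invention.

```python
import xml.etree.ElementTree as ET

def pos_xml_to_tsv(xml_text):
    """Convert tagger XML into tab-delimited text with a header row."""
    root = ET.fromstring(xml_text)
    lines = ["sid\twid\ttoken\tlemma\tpos"]
    for sentence in root.iter("sentence"):
        for word in sentence.iter("word"):
            lines.append("\t".join([
                sentence.get("id", ""),    # sentence identifier
                word.get("wid", ""),       # word identifier within the sentence
                (word.text or "").strip(), # the token itself
                word.get("lemma", ""),
                word.get("pos", ""),
            ]))
    return "\n".join(lines) + "\n"

# A tiny, hand-made example of the assumed structure
example = (
    '<pos><sentence id="0">'
    '<word wid="0" pos="PRP" lemma="I">I</word>'
    '<word wid="1" pos="VBD" lemma="go">went</word>'
    '</sentence></pos>'
)
print(pos_xml_to_tsv(example), end="")
```

The result is one row per token, which is exactly the shape spreadsheets, databases, and OpenRefine like best.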

For the purposes of this workshop, the whole of the harvested data has been pre-processed with the Stanford Log-linear Part-Of-Speech Tagger. The result has been mirrored in the parts-of-speech folder/directory. The reader can open the files in the parts-of-speech folder/directory for analysis. For example, you might open them in OpenRefine and try to see what verbs appear most frequently in a given document. My guess is the answer will be the lemmas “be” or “have”. The next set of most frequently used verb lemmas will probably be more indicative of the text.
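The same question can be asked with a few lines of Python instead of OpenRefine. This sketch counts & tabulates verb lemmas in tab-delimited text. It assumes the file has a header row with columns named lemma and pos (an assumption; adjust the names to match the actual files), and it relies on the fact that Penn Treebank verb tags begin with VB.

```python
import csv
import io
from collections import Counter

def top_verb_lemmas(tsv_text, n=10):
    """Return the n most frequent verb lemmas as (lemma, count) pairs."""
    reader = csv.DictReader(io.StringIO(tsv_text), delimiter="\t", quoting=csv.QUOTE_NONE)
    counts = Counter(
        row["lemma"].lower()
        for row in reader
        if row["pos"].startswith("VB")  # VB, VBD, VBG, VBN, VBP, and VBZ
    )
    return counts.most_common(n)

# A tiny, hand-made example
example = (
    "token\tlemma\tpos\n"
    "was\tbe\tVBD\n"
    "is\tbe\tVBZ\n"
    "went\tgo\tVBD\n"
    "pond\tpond\tNN\n"
)
print(top_verb_lemmas(example))
```

To look past “be” and “have”, simply ask for more items and skip the first few.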

The process of extracting named-entity features is very similar with the Stanford NER. The original Stanford NER distribution comes with a number of jar files, models, and configuration/parameter files. After downloading the distribution, the reader can run a little GUI application, import some text, and run NER. The result will look something like this:

The simple command-line interface takes a single file as input, and it outputs a stream of tagged sentences. For example:

$ ner.sh walden.txt

> ner.bat walden.txt

Each tag denotes an entity (i.e. the name of a person, the name of a place, the name of an organization, etc.). Like the result of all machine learning algorithms, the tags are not necessarily correct, but upon closer examination, most of them are pretty close. Like the POS Tagger, this workshop’s distribution comes with a set of scripts/programs that can make the Stanford NER tool locally available as a server. It also comes with a simple client to query the server. Like the workshop’s POS tool, the reader (with a Macintosh or Linux computer) can extract named entities all in two goes:

$ ./bin/pos-server.sh

$ ./bin/pos-client.pl walden.txt > walden.ner; ./bin/pos2tab.pl walden.ner > walden.tsv

Like the workshop’s pre-processed part-of-speech files, the workshop’s corpus has been pre-processed with the NER tool. The preprocessed files ought to be in a folder/directory named… named-entities. And like the parts-of-speech files, the “ner” files are tab-delimited text files readable by spreadsheets, databases, OpenRefine, etc. For example, you might open one of them in OpenRefine and see what names of people trend in a given text. Try to create a list of places (which is not always easy), export them to a file, and open them with Tableau Public for the purposes of making a geographic map.

Extracting parts-of-speech and named entities straddles simple text mining and natural language processing. Simple text mining is often about counting & tabulating features (words) in a text. These features have little context sans proximity to other features. On the other hand, parts-of-speech and named entities denote specific types of things, namely specific types of nouns, verbs, adjectives, etc. While these things do not necessarily denote meaning, they do provide more context than simple features. Extracting parts-of-speech and named entities is (more or less) an easy text mining task with more benefit than cost. Extracting parts-of-speech and named entities goes beyond the basics.

It is imperative to create plain text versions of corpus items.

Text mining cannot be done without plain text data. This means HTML files need to be rid of markup. It means PDF files need to have been “born digitally” or they need to have been processed with optical character recognition (OCR), and then the underlying text needs to be extracted. Word processor files need to be converted to plain text, and the result saved accordingly. The days of plain ol’ ASCII text files need to be forgotten. Instead, the reader needs to embrace Unicode, and whenever possible, make sure characters in the text files are encoded as UTF-8. With UTF-8 encoding, one gets all of the nice accent marks so foreign to United States English, but one also gets all of the pretty emoticons increasingly sprinkling our day-to-day digital communications. Moreover, the data needs to be as “clean” as possible. When it comes to OCR, do not fret too much. Given the large amounts of data the reader will process, “bad” OCR (OCR with less than 85% accuracy) can still be quite effective.
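To make “rid of markup” and “encoded as UTF-8” a bit more concrete, here is a small sketch using nothing but Python’s standard library. It is an illustration only, not one of the workshop’s tools. The names TextExtractor and html2txt are made up for this example, and real-world HTML usually calls for something more robust.

```python
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect the text of an HTML document, skipping script and style elements."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.parts = []
        self.skipping = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skipping += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skipping:
            self.skipping -= 1

    def handle_data(self, data):
        if not self.skipping:
            self.parts.append(data)

def html2txt(html):
    """Return the text of the given HTML with markup removed and whitespace collapsed."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(" ".join(parser.parts).split())

html = ("<html><head><style>p {color: red}</style></head>"
        "<body><p>Caf&eacute; <b>Walden</b></p></body></html>")
text = html2txt(html)
print(text)

# encode the result as UTF-8; the accented character becomes two bytes
print(text.encode("utf-8"))
```

The pieces of text are joined with spaces so words in adjacent elements do not run together. The cost is that a word split across tags will be split in the output, too. When saving the result, open the file with encoding='utf-8' so the characters are written as UTF-8.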

Converting harvested data into plain text used to be laborious as well as painful, but then a Java application called Apache Tika came on the scene. [1] Tika comes in two flavors: application and server. The application version can take a single file as input, and it can output metadata as well as any underlying text. The application can also work in batch mode taking a directory as input and saving the results to a second directory. Tika’s server version is much more expressive, more powerful, and very HTTP-like, but it requires more “under the hood” knowledge to exploit to its fullest potential.

For the sake of this workshop, versions of the Tika application and Tika server are included in the distribution, and they have been saved in the lib directory with the names tika-desktop.jar and tika-server.jar. The reader can run the desktop/GUI version of the Tika application by merely double-clicking on it. The result will be a dialog box.


Drag a PDF (or just about any) file on to the window, and Tika will extract the underlying text. To use the command-line interface, something like this could be run to output the help text:

$ java -jar ./lib/tika-desktop.jar --help

> java -jar .\lib\tika-desktop.jar --help

And then something like these commands to process a single file or a whole directory of files:

$ java -jar ./lib/tika-desktop.jar -t <filename>

$ java -jar ./lib/tika-desktop.jar -t -i <input directory> -o <output directory>

> java -jar .\lib\tika-desktop.jar -t <filename>

> java -jar .\lib\tika-desktop.jar -t -i <input directory> -o <output directory>

Try transforming a few files individually as well as in batch. What does the output look like? To what degree is it readable? To what degree has the formatting been lost? Text mining does not take formatting into account, so there is no huge loss in this regard.

Without some sort of scripting, the use of Tika to convert harvested data into plain text can still be tedious. Consequently, the whole of the workshop’s harvested data has been pre-processed with a set of Perl and bash scripts (which probably won’t work on Windows computers unless some sort of Linux shell has been installed):

$ ./bin/tika-server.sh – runs Tika in server mode on TCP port 8080, and waits patiently for incoming connections

$ ./bin/tika-client.pl – takes a file as input, sends it to the server, and returns the plain text while handling the HTTP magic in the middle

$ ./bin/file2txt.sh – a front-end to the second script taking a file and directory name as input, transforming the file into plain text, and saving the result with the same name but in the given directory and with a .txt extension

The entirety of the harvested data has been transformed into plain text for the purposes of this workshop. (“Well, almost all.”) The result has been saved in the folder/directory named “corpus”. Peruse the corpus directory. Compare & contrast its contents with the contents of the harvest directory. Can you find any omissions, and if so, then can you guess why/how they occurred?
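The “HTTP magic in the middle” is not very mysterious. The following Python sketch shows roughly what a client of a Tika server does. It is not a translation of the workshop’s Perl script. It assumes the server listens on localhost port 8080 (as described above), that it exposes Tika’s usual /tika resource, and that the response is encoded as UTF-8. The function names are made up for this example.

```python
import urllib.request

# assumption: the server started by tika-server.sh listens here
TIKA = "http://localhost:8080/tika"

def build_request(data, url=TIKA):
    """Build an HTTP PUT request asking a Tika server to return plain text."""
    return urllib.request.Request(url, data=data, method="PUT",
                                  headers={"Accept": "text/plain"})

def file2txt(path, url=TIKA):
    """Send the given file to the Tika server, and return the extracted text."""
    with open(path, "rb") as handle:
        request = build_request(handle.read(), url)
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")

# inspect a request without sending it anywhere
request = build_request(b"%PDF-1.4 ...")
print(request.get_method(), request.full_url, request.get_header("Accept"))
```

With the server running, something like file2txt( 'walden.pdf' ) ought to return the text of the given (hypothetical) file. Looping over the contents of a directory and saving each result with a .txt extension is then a small exercise.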

Topic modeling is an unsupervised machine learning process. It is used to create clusters (read “subsets”) of documents, and each cluster is characterized by sets of one or more words. Topic modeling is good at answering questions like, “If I were to describe this collection of documents in a single word, then what might that word be? How about two?” or make statements like, “Once I identify clusters of documents of interest, allow me to read/analyze those documents in greater detail.” Topic modeling can also be used for keyword (“subject”) assignment; topics can be identified and then documents can be indexed using those terms. In order for a topic modeling process to work, a set of documents first needs to be assembled. The topic modeler then, at the very least, takes an integer as input, which denotes the number of topics desired. All other possible inputs can be assumed, such as use of a stop word list or denoting the number of times the topic modeler ought to internally run before it “thinks” it has come to the best conclusion.
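To make the inputs concrete (a set of documents, a number of topics, and optionally a stop word list and a number of iterations), here is a toy topic modeler written with only Python’s standard library. It is a bare-bones sketch of one common technique (latent Dirichlet allocation by way of collapsed Gibbs sampling), not the implementation found in any particular tool. The function name, the default values, and the sample documents are all assumptions made for the sake of illustration.

```python
import random
from collections import Counter

def model_topics(documents, num_topics, num_words=1, iterations=100,
                 stop_words=(), seed=0):
    """Toy LDA (collapsed Gibbs sampling); returns the top words of each topic."""
    rng = random.Random(seed)
    alpha, beta = 0.1, 0.01  # smoothing values, chosen arbitrarily for this sketch

    # parse each document into lower-cased, alphabetic, non-stop-word features
    docs = [[w for w in d.lower().split() if w.isalpha() and w not in stop_words]
            for d in documents]
    vocabulary_size = len({w for doc in docs for w in doc})

    # count tables: topics per document, words per topic, and totals per topic
    doc_topic = [[0] * num_topics for _ in docs]
    topic_word = [Counter() for _ in range(num_topics)]
    topic_total = [0] * num_topics

    def update(d, w, t, delta):
        doc_topic[d][t] += delta
        topic_word[t][w] += delta
        topic_total[t] += delta

    # start by assigning every word to a random topic
    assignments = []
    for d, doc in enumerate(docs):
        assignments.append([rng.randrange(num_topics) for _ in doc])
        for w, t in zip(doc, assignments[d]):
            update(d, w, t, +1)

    # repeatedly re-assign each word, favoring topics common in its document
    # and topics in which the word is already common
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                update(d, w, assignments[d][i], -1)
                weights = [(doc_topic[d][k] + alpha) * (topic_word[k][w] + beta)
                           / (topic_total[k] + vocabulary_size * beta)
                           for k in range(num_topics)]
                assignments[d][i] = rng.choices(range(num_topics), weights=weights)[0]
                update(d, w, assignments[d][i], +1)

    return [[w for w, n in topic_word[k].most_common() if n > 0][:num_words]
            for k in range(num_topics)]

# hypothetical documents; describe them with one topic of one word
documents = ["the whale and the sea", "the whale hunts", "a whale of a tale"]
print(model_topics(documents, num_topics=1, num_words=1,
                   stop_words={"the", "and", "a", "of"}))
```

With a single topic every word lands in the same cluster, so the “one word” is simply the most frequent word that is not a stop word. With more topics the assignments depend on the random seed, which is one reason why results differ from run to run.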

MALLET is the granddaddy of topic modeling tools, and it supports other functions such as text classification and parsing. [1] It is essentially a set of Java-based libraries/modules designed to be incorporated into Java programs or executed from the command line.

A subset of MALLET’s functionality has been implemented in a program called topic-modeling-tool, and the tool bills itself as “A GUI for MALLET’s implementation of LDA.” [2] Topic-modeling-tool provides an easy way to read what possible themes exist in a set of documents or how the documents might be classified. It does this by creating topics, displaying the results, and saving the data used to create the results for future use. Here’s one way:

The result will be a set of HTML, CSS, and CSV files saved in the output location. The “answer” can also be read in the tool’s console.

A more specific example is in order. Here’s how to answer the question, “If I were to describe this corpus in a single word, then what might that one word be?”:


What one word can be used to describe your collection?

Iterate the modeling process by slowly increasing the number of desired topics and number of topic words. Personally, I find it interesting to implement a matrix of topics to words. For example, start with one topic and one word. Next, denote two topics with two words. Third, specify three topics with three words. Continue the process until the sets of words (“topics”) seem to make intuitive sense. After a while you may observe clear semantic distinctions between each topic as well as commonalities between each of the topic words. Distinctions and commonalities may include genders, places, names, themes, numbers, OCR “mistakes”, etc.

The venerable Python Natural Language Toolkit (NLTK) is well worth the time of anybody who wants to do text mining more programmatically. [0]

For much of my career, Perl has been the language of choice when it came to processing text, but in the recent past it seems to have fallen out of favor. I really don’t know why. Maybe it is because so many other computer languages have come into existence in the past couple of decades: Java, PHP, Python, R, Ruby, Javascript, etc. Perl is more than capable of doing the necessary work. Perl is well-supported, and there are a myriad of supporting tools/libraries for interfacing with databases, indexers, TCP networks, data structures, etc. On the other hand, few people are being introduced to Perl; people are being introduced to Python and R instead. Consequently, the Perl community is shrinking, and the communities for other languages are growing. Writing something in a “dead” language is not very intelligent, but that may be over-stating the case. On the other hand, I’m not going to be able to communicate with very many people if I speak Latin and everybody else is speaking French, Spanish, or German. It behooves the reader to write software in a language apropos to the task as well as a language used by many others.

Python is a good choice for text mining and natural language processing. The Python NLTK provides functionality akin to much of what has been outlined in this workshop, but it goes much further. More specifically, it interfaces with WordNet, a sort of thesaurus on steroids. It interfaces with MALLET, the Java-based classification & topic modeling tool. It is very well-supported and continues to be maintained. Moreover, Python is mature in & of itself. There are a host of Python “distributions/frameworks”. There are any number of supporting libraries/modules for interfacing with the Web, databases & indexes, the local file system, etc. Even more importantly for text mining (and natural language processing) techniques, Python is supported by a set of robust machine learning libraries/modules called scikit-learn. If the reader wants to write text mining or natural language processing applications, then Python is really the way to go.

In the etc directory of this workshop’s distribution is a “Jupyter Notebook” named “An introduction to the NLTK.ipynb”. [1] Notebooks are sort of interactive Python interfaces. After installing Jupyter, the reader ought to be able to run the Notebook. This specific Notebook introduces the use of the NLTK. It walks you through the processes of reading a plain text file; parsing the file into words (“features”); normalizing the words; counting & tabulating the results; graphically illustrating the results; and finding co-occurring words, words with similar meanings, and words in context. It also dabbles a bit into parts-of-speech and named entity extraction.

The heart of the Notebook’s code follows. Given a sane Python installation, one can run this program by saving it with a name like introduction.py, saving a file named walden.txt in the same directory, changing to the given directory, and then running the following command:

python introduction.py

The result ought to be a number of textual outputs in the terminal window as well as a few graphics.

Errors may occur, probably because other Python libraries/modules have not been installed. Follow the error messages’ instructions, and try again. Remember, “Your mileage may vary.”
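One way to get ahead of such errors is to check for the needed modules before running anything. The sketch below is merely a convenience, and the list of modules is an assumption: nltk for the Toolkit itself, and matplotlib because the Toolkit’s plotting functions depend on it.

```python
import importlib.util

def missing_modules(names=("nltk", "matplotlib")):
    """Return the names of the given modules that Python cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]

missing = missing_modules()
if missing:
    print("Install these first: pip install " + " ".join(missing))
else:
    print("All required modules found.")
```

The Toolkit also relies on data packages (tokenizer models, stop word lists, and the like) that are installed separately with nltk.download(). When one is absent, the Toolkit raises a LookupError whose message names the missing resource and the command needed to fetch it.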

# configure; define the location of a plain text file for analysis (here, relative to the current directory)

FILE = 'walden.txt'

# import / require the use of the Toolkit

from nltk import *

# slurp up the given file; display the result

with open( FILE, 'r', encoding='utf-8' ) as handle:
    data = handle.read()

print( data )

# tokenize the data into features (words); display them

features = word_tokenize( data )

print( features )

# normalize the features to lower case and exclude punctuation

features = [ feature for feature in features if feature.isalpha() ]

features = [ feature.lower() for feature in features ]

print( features )

# create a list of (English) stopwords, and then remove them from the features

from nltk.corpus import stopwords

stop_words = set( stopwords.words( 'english' ) )

features = [ feature for feature in features if feature not in stop_words ]

# count & tabulate the features, and then plot the results -- season to taste

frequencies = FreqDist( features )

plot = frequencies.plot( 10 )

# create a list of words occurring only once (hapaxes); display them

hapaxes = frequencies.hapaxes()

print( hapaxes )

# count & tabulate ngrams from the features -- season to taste; display some

pairs = ngrams( features, 2 )

frequencies = FreqDist( pairs )

print( frequencies.most_common( 10 ) )

# create a list of each token's length, and plot the result; How many "long" words are there?

lengths = [ len( feature ) for feature in features ]

plot = FreqDist( lengths ).plot( 10 )

# initialize a stemmer, stem the features, count & tabulate, and output

from nltk.stem import PorterStemmer

stemmer = PorterStemmer()

stems = [ stemmer.stem( feature ) for feature in features ]

frequencies = FreqDist( stems )

print( frequencies.most_common( 10 ) )

# re-create the features and create a NLTK Text object, so other cool things can be done

features = word_tokenize( data )

text = Text( features )

# count & tabulate, again; list a given word -- season to taste

frequencies = FreqDist( text )

print( frequencies[ 'love' ] )

# do keyword-in-context searching against the text (concordancing)

text.concordance( 'love' )

# create a dispersion plot of given words

plot = text.dispersion_plot( [ 'love', 'war', 'man', 'god' ] )

# output the "most significant" bigrams, considering surrounding words (size of window) -- season to taste

text.collocations( num=10, window_size=4 )

# given a set of words, what words are nearby

text.common_contexts( [ 'love', 'war', 'man', 'god' ] )

# list the words (features) most associated with the given word

text.similar( 'love' )

# create a list of sentences, and display one -- season to taste

sentences = sent_tokenize( data )

sentence = sentences[ 14 ]

print( sentence )

# tokenize the sentence and parse it into parts-of-speech, all in one go

sentence = pos_tag( word_tokenize( sentence ) )

print( sentence )

# extract named entities from a sentence, and print the results

entities = ne_chunk( sentence )

print( entities )

# done

quit()

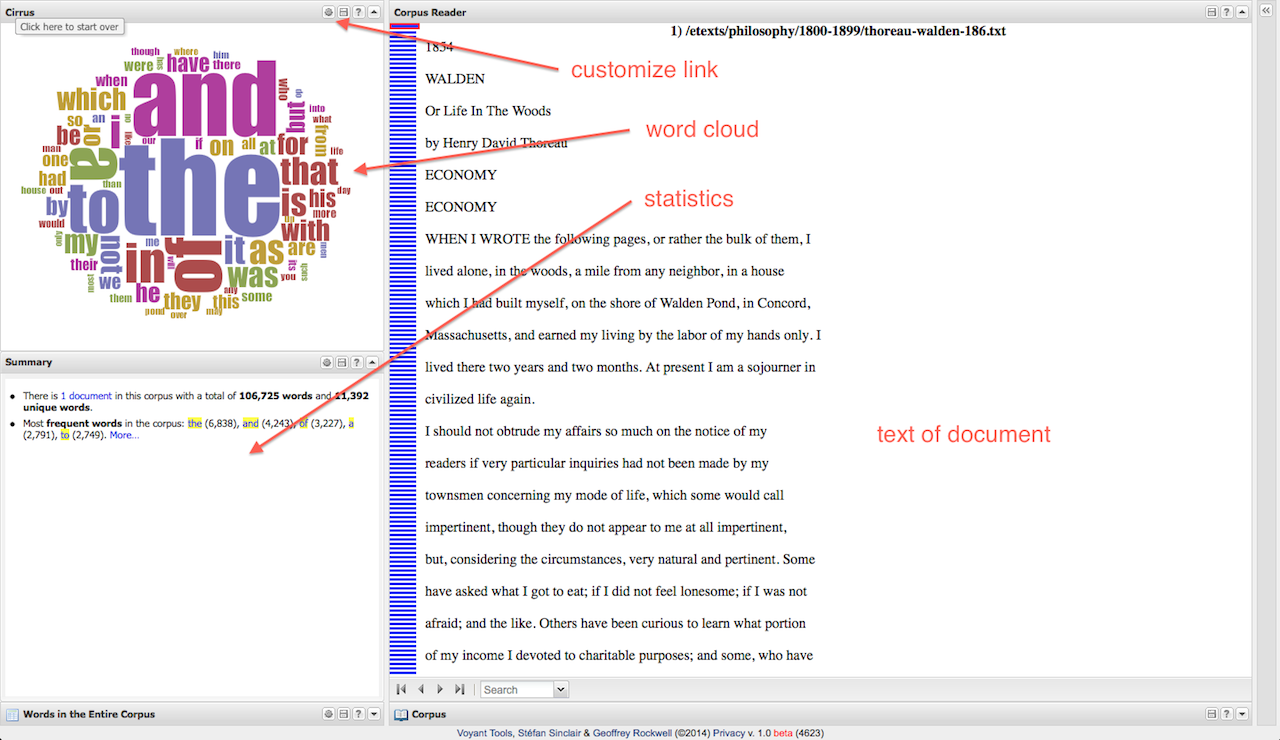

Voyant Tools is often the first go-to tool used by either: 1) new students of text mining and the digital humanities, or 2) people who know what kind of visualization they need/want. [1] Voyant Tools is also one of the longest supported tools described in this bootcamp.