Review, Revise, and (re)Release: Updating an Information Literacy Tutorial to Embed a Science Information Life Cycle

Jeffra Diane Bussmann

STEM/Web Librarian

California State University, East Bay

Hayward, California

Jeffra.Bussmann@csueastbay.edu

Caitlin E. Plovnick

Reference & Instruction Librarian

University of California, Irvine

Irvine, California

cplovnic@uci.edu

Abstract

In 2008, University of California, Irvine (UCI) Libraries launched their first Find Science Information online tutorial. It was an innovative web-based tool, containing not only informative content but also interactive activities, embedded hyperlinked resources, and reflective quizzes, all designed primarily to educate undergraduate science students on the basic components of finding science information. From its inception, the UCI Libraries intended to update the tutorial, ensuring its continued relevance and currency. This article covers the authors' process of evaluating and revising the tutorial, including usability testing and the changes made to the tutorial based on the findings. One particular discovery was a need to contextualize the tutorial by more thoroughly addressing the scholarly communication system of the sciences, which led to its being restructured around a science information life cycle model. A literature review and comparison images from the original and revised tutorial are included.

Introduction

Convincing students of the importance of information literacy (IL) is a challenge that librarians usually address through classes, workshops, one-shot sessions, and online tutorials. We study theories and methods for activating this transformative knowledge in face-to-face and online environments. A compounding IL challenge is its integration into the Science, Technology, Engineering and Mathematics (STEM) disciplines. IL instruction has rarely been seen as immediately relevant to STEM students in higher education, especially since science students' work does not usually involve writing papers (Scaramozzino 2010). And yet, a competent science student must engage with the scholarly ecosystem, evaluating scientific information critically and using it appropriately in order to produce new scientific knowledge. Recently, the Association of College and Research Libraries Working Group on Intersections of Scholarly Communication and Information Literacy (2013) published a white paper that defines scholarly communication as "the systems by which the results of scholarship are created, registered, evaluated, disseminated, preserved, and reshaped into new scholarship" (p. 4). The revised science information online tutorial discussed in this article addresses these issues of online learning, science information literacy, and scholarly communication, and offers one possible solution to these challenges.

To begin the revision process, we considered a series of questions to evaluate the original purpose of the tutorial, the needs of its users, and how well the tutorial currently meets those needs. These questions took the following form:

- Do we revise or retire the existing tutorial?

- What is out of date in terms of design, navigation, content, and technology?

- How have other libraries revised tutorials, and what can we learn from them?

- How has the tutorial audience changed, if at all?

- What is most useful in the current tutorial? What is least useful?

- What hinders the success of the tutorial as an IL teaching tool?

- What can be done to facilitate greater comprehension of the tutorial's learning outcomes?

- Which revisions are attainable within the scope of the project?

- Finally and most importantly, why should students use the tutorial? Why is it immediately valuable to their academic lives, as well as their lifelong learning experience?

Background

- Do we revise or retire the existing tutorial?

- What is out of date in terms of design, navigation, content, and technology?

In 2008, University of California, Irvine (UCI) Libraries launched their first Find Science Information online tutorial. It was an innovative web-based tool, containing not only informative content but also interactive activities, embedded hyperlinked resources, and reflective quizzes, all designed primarily to educate undergraduate science students on the basic components of finding science information. Significantly, the tutorial also incorporated the Information Literacy Competency Standards for Science and Engineering/Technology from the American Library Association (ALA)/Association of College and Research Libraries (ACRL)/Science and Technology Section (STS) Task Force on Information Literacy for Science and Technology. An additional component was added later that enabled users to receive a certificate of completion at the end of the tutorial. Scaramozzino (2008) wrote about the creation of the original Find Science Information tutorial and the initial needs that informed its design and structure.

The tutorial was originally designed in 2008 with the expectation that it would need updating as needs and technological capabilities changed. That time came in 2011. We determined that there was still value in offering a science information tutorial and that the 2008 tutorial still contained relevant, useful content, but that its design, technology, navigation, and content all required updating. The Libraries' web site had been redesigned, and newer tutorials shared a common visual design that the Find Science Information tutorial lacked. In the intervening three years, web development technology had also changed. For example, instructional designers had learned that building tutorials in Flash was no longer desirable because Flash is incompatible with many mobile and tablet devices. As web sites, including web-based tutorials, have evolved, the ways we navigate them have changed to reflect new technology and usability knowledge. Finally, since the tutorial content was first developed, there have been changes in academic discourse and scholarship that we wished to address.

We turned to UCI's Begin Research tutorial as an initial guide for our project. Originally developed as an introductory IL tutorial for undergraduates, the tutorial was later adapted by a team of librarians to share across the ten University of California campuses and beyond. The tutorial's aesthetic design, which matches the UC Libraries' web site, provided us with a visual model, even as we recognized that it had been adapted to reflect the needs of different institutions, as explored by Palmer et al. (2012). As such, we needed to plan a tutorial that would meet the specific needs of UCI undergraduate students while also addressing the universal needs of science information users and seekers.

Literature Review

- How have other libraries revised tutorials, and what can we learn from them?

In the extant literature, very little discussion can be found on how to use an online tutorial to teach students about the specifics of science information in the context of information literacy. One article that does address this issue contains a content analysis of over 30 science information library tutorials in relation to good pedagogical practices (Li 2011). The author not only examines how the science information tutorials align with the science and technology IL standards, but also specifically highlights UCI's Find Science Information tutorial. While she presents a positive review of that tutorial, she also offers valuable suggestions for its improvement. In particular, she notes that the Find Science Information tutorial successfully addresses the first four STS Information Literacy standards but omits the fifth, which states that "The information literate student understands that information literacy is an ongoing process and an important component of lifelong learning and recognizes the need to keep current regarding new developments in his or her field" (as cited in Li 2011, p. 15).

Similarly, we found little written on the holistic process of revising an existing tutorial, including integrating it with other existing tutorials and making it consistent with the larger library web site. Blummer and Kritskaya (2009) draw on multiple case studies to outline best practices for online library tutorials, from creation to assessment. While their study focuses on developing tutorials from scratch, many of their recommendations are also relevant for those seeking to update or evaluate an existing tutorial. These include conducting a needs assessment, determining objectives, and verifying the appropriate integration of established standards, such as the ACRL Information Literacy Competency Standards, into tutorial content. They also recommend developing strategic partnerships to aid in the creation and development of the tutorial, such as with online learning system administrators and graphic designers. Other best practices consider the needs of individual users; these include active learning strategies such as quizzes, as well as clear navigation and the flexibility to accommodate different learning styles.

One relevant project is the Purdue University librarians' assessment of their CORE (Comprehensive Online Research Education) information literacy tutorial, which sought out the perspective of tutorial users, specifically science students (Weiner et al. 2011). They administered a survey containing multiple-choice and open-ended questions to first-year students in biology and nursing classes. The librarians found that students agreed certain material was superfluous, while other areas needed more information. Their study focuses primarily on the process of surveying students, the results of that survey, and the authors' suggestions for future versions of the tutorial. The updates to the CORE tutorial itself are not included in their article.

Other examples of tutorial evaluation and revision involve the Texas Information Literacy Tutorial (TILT), an oft-cited and influential tutorial created by the University of Texas at Austin in the late 1990s. It has not only undergone several revisions in the last decade, but has also been used as a base model for tutorial adaptations by many other institutions. For example, Noe and Bishop (2005) measured the effectiveness of their revised tutorial at Auburn University with pre-tests and post-tests, and based their revision on user responses. At Wayne State University, Befus and Byrne (2011) also adapted TILT into their new re:Search tutorial and shared the results of a post-tutorial questionnaire. Most recently, Anderson and Mitchell (2012) delineate their process of taking their TILT-based tutorial and completing a major overhaul to place the newly revised content into a learning management system. None of these authors describes the detailed process of revising and updating the content from the original tutorial into their new tutorial.

In addition to surveys or pre- and post-tests, usability studies are an important way to evaluate online learning objects from a user's perspective, in order to determine how well the object meets user needs and where difficulties may arise. Little has been documented in the literature on the usability testing of online library tutorials, although such testing of library web sites is more prevalent. Not surprisingly, successful online library tutorials make effective use of good web design principles (Reece 2007). Early research on usability testing of an academic library's online presence focuses on ease of use (Valentine & Nolan 2002). Later recommendations include assessing the usefulness of content and how design facilitates better understanding of the library itself (Vaughn & Callicott 2003). Some libraries have used Jakob Nielsen's online resources to help set parameters for their usability testing, which involves the "talk-aloud method," in which students work through various scenarios while vocalizing their steps and thoughts (George 2005; King & Jannik 2005).

A study by Bury and Oud (2005) at the Wilfrid Laurier University Library in Ontario takes into account the distinctions between a library web site and a tutorial. They note that library web sites function as gateways to multiple channels of information, while tutorials, which have specific learning goals, are experienced in a more linear fashion. They implemented inquiry-based testing, relying less on observing participants completing specific tasks and more on soliciting participants' feedback as they worked through each section of the tutorial.

Klare and Hobbs (2011) used an observation-based approach when they redesigned their library's web presence at Wesleyan University. They met with students to review the web site via a usability test, but employed ethnographic techniques in which test participants guided the conversation, rather than the traditional scripted encounter. "The goal is to attempt as much as possible to see the situation from the subject's point of view to understand how the subject approaches and construes the situation" (Klare & Hobbs 2011, p. 100). This free-form method yields more substantive information than a scripted encounter because it can also reveal users' natural behavior on the web site.

User Study - Their Perspective

- How has the tutorial audience changed, if at all?

- What is most useful in the current tutorial? What is least useful?

- What hinders the success of the tutorial as an IL teaching tool?

In order to determine the needs of our intended audience, undergraduate students at UCI, we needed to conduct user testing. Earlier efforts to make small updates to UCI's Find Science Information tutorial had solicited student feedback by observing students as they progressed through the tutorial and recording their comments. Scaramozzino (2008) stressed the importance of this: "An important lesson learned in the process was to maintain a focus on the learning styles of the end users, the students, instead of the people providing the content, the librarians" (p. 623).

As seen in the literature, there are many different ways to conduct user testing. Having participants perform a formal, structured series of tasks can uncover usability issues throughout the tutorial, while a more serendipitous observational approach can reveal important patterns in user behavior. In order to gather information on both the performance of the learning object being tested and the needs and habits of potential users, we employed a combination of these methods.

We created a script using the template provided by the usability.gov web site and modified it to our specifications (U.S. Department of Health and Human Services). The final script (see Appendix) includes:

- A neutral introduction

- Preliminary questions to determine relevant background information for each test participant

- A scenario that required participants to work through the tutorial while sharing their impressions and reasoning out loud

- A set of post-tutorial interview questions designed to give them the opportunity to provide more detailed feedback

We recruited six students, ranging from second- to fourth-year, who worked as student assistants in the libraries to serve as test participants. While the ideal number of participants for catching most usability problems varies depending on the research consulted, it has been shown to fall between four and eight (Nielsen & Landauer 1993; Rubin & Chisnell 2008). Only one student had used a library tutorial before. It was important that half of the participants were science majors and half were not, because the tutorial is intended for all undergraduate students studying science, regardless of major. We wanted to see how students with a background in scientific studies would respond to the tutorial, as well as those without regular or recent instruction in science concepts such as the scientific method.
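As a rough illustration of why a small sample can be enough, the problem-discovery model of Nielsen and Landauer (1993) estimates the share of usability problems found by n participants from a per-participant detection rate λ. The value λ = 0.31 below is Nielsen's often-cited average across projects, assumed here for illustration only; it was not measured in this study, and the model assumes every problem is equally likely to be found by each participant.

```latex
\text{Found}(n) = 1 - (1 - \lambda)^{n}

\text{with } \lambda = 0.31:\quad
\text{Found}(4) = 1 - 0.69^{4} \approx 0.77,\qquad
\text{Found}(6) = 1 - 0.69^{6} \approx 0.89,\qquad
\text{Found}(8) = 1 - 0.69^{8} \approx 0.95
```

Under these assumptions, six participants would be expected to surface roughly nine in ten problems, with diminishing returns from each additional participant.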

We met with each student individually for approximately one hour. A computer station was set up for them to complete the tutorial while one or both of us sat nearby and took notes as students shared their reflections and experiences. It was important for us, as observers, to remain neutral and not suggest a preference for any particular outcome. We also made it clear that students should approach the tutorial as naturally as possible, skipping sections or spending time on them as they would in a normal, unobserved setting. While we wanted to test the usability of all parts of the tutorial, we also wanted to observe participants' natural responses to it: which parts they perceived as valuable and which they were inclined to overlook. In this way, we followed the ethnographic model described by Klare and Hobbs (2011), letting students control the conversation and set the pace for most of the testing session. Because of the tutorial's linear structure and interactive components, students were essentially presented with a series of tasks to accomplish, but it was left to them to decide which modules they would visit, which pages they would read, and which exercises they would complete.

In analyzing the results of the usability test, there were some notable areas where student responses agreed with one another:

- Nearly all of the participants were frustrated by animated text that appeared line by line. While this design was intended to slow readers down and not overwhelm them with too much text all at once, it annoyed test participants who were then inclined to skip the page rather than wait for the remaining text to load.

- Participants found navigation between modules confusing. Within the modules, individual pages ended with a "next" button, but at the end of a module, there was no similar button directing students where to go next.

- Students often missed activity directions in small gray/light blue font located below the activities at the bottom of the tutorial webpage, which caused confusion and frustration.

- Participants tended to skip over or fail to notice the information on the right-hand side of the screen and the question/answer boxes that required further clicking.

Other student responses varied greatly in terms of what each participant found useful or preferred to skip. Some students hurried through informational pages and focused primarily on interactive exercises and quizzes. Others skipped all activities and showed interest only in definitions and links. Regardless of what exactly they skipped, every student tended to filter out all but what they deemed most important. Their sense of what was important and what was extraneous differed from student to student based on existing knowledge and preferred learning styles, suggesting that information should be presented in multiple ways so that all students can learn the material regardless of what they choose to skip.

When students saw information they recognized, they were inclined to assume they knew everything on that page and skip it completely. For example, a section on "Books" was regularly skipped because students already "knew" what books were and assumed there would be no new information. However, students' performance on the tutorial's quizzes suggested that they had not actually mastered that information. To ensure that students do not skip useful information, we realized that it would have to be presented differently.

Altogether, these findings showed us that students did not understand why they should care about anything besides the practical methods for finding science information. They also missed important connections between the tutorial's modules and how each relates to finding science information.

Science Information Life Cycle - The Framework

- What can be done to facilitate greater comprehension of the learning outcomes?

- Why should students use the tutorial? Why is it immediately valuable to their academic lives, as well as their lifelong learning experience?

As we reviewed the usability test results, we noted that students needed something more than updated navigation buttons to understand the process, or cycle, of science information. Initially, we proposed simply changing from the existing three longer modules to five slightly shorter ones to make room for some additional content. This adjustment would also allow the tutorial to be restructured to match the design of the UCI Libraries' web site and the Begin Research web-based tutorial, both of which use tabs for each section of content. As we reflected on single-word titles to describe these modules and their intended content, we realized that, linked together, the words could illustrate the holistic process that science information undergoes, with each modular lesson covering one stage. From this idea, we constructed the science information life cycle around five simple module titles: produce, share, organize, find, and use (Figure 1). The cycle presents an immediate, cohesive, overarching view of how the different modules relate to each other, with science information at the center of the action. Huvila (2011) warns against IL instruction that focuses too heavily on the seeking and use of information while leaving out the production and organization of information. The author also suggests that IL teaching should incorporate the whole information process, in which used information can lead to new knowledge (that is, the creation or production of new information), thereby beginning the process again. The science information life cycle fits this holistic approach by addressing the production of science information, the scholarly communication methods used by scientists, and how these influence the ways science information is organized. It is also consistent with the ACRL Working Group on Intersections of Scholarly Communication and Information Literacy's (2013) assessment of student learning needs.

The science information life cycle also addresses how context can facilitate student comprehension of content. Observed reactions in the usability testing indicated that students expected simply to learn how to find science information. When the tutorial delved into parts of the scholarly ecosystem surrounding science information, including how it comes to be, how it is communicated, and how it should be used appropriately, these lessons were unexpected and undesired. Students could not understand why they needed this additional information. The cycle is designed to illustrate that the whole process of science information affects how one looks for and finds information. In other words, understanding how science information is produced, shared, and organized is integral to understanding how to find and use it. Thus, the science information life cycle became both the content we aimed to teach as well as the framework used for teaching it.

Figure 1: The home page for the 2012 Find Science Information tutorial, which includes the Science Information Life Cycle

Another critical role that the science information life cycle plays is that of a compass, providing a fixed reference point for marking movement in and progress through the tutorial. The tutorial's home page puts the science information life cycle front and center to orient the user immediately. The cycle image remains present throughout the tutorial as a navigational marker. It is not hyperlinked, but functions as a visual indicator of where the student is currently located in the tutorial. Color themes also reinforce location, mirroring the libraries' web site, which uses color in a similar functional way. In each module, the page-turning controls (now arrowheads) have been moved to the top right, and instructions for activities have been integrated into the page content. During usability testing, we noted that when students reached the end of a module in the original tutorial, they were unsure where to go next. To address this weakness, the color of the "next" arrow on the final page of a module now matches the following module's color, confirming the next move and providing subtle navigational cues through the progress of the cycle. We found it extremely useful to work from a core structural concept like the science information life cycle because it ties together issues of design, navigation, and content, suggesting elegant solutions for each.
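The "next" arrow rule described above amounts to a small lookup over the module order. The following is a hypothetical Python sketch of that logic only: the revised tutorial itself was built with JavaScript and jQuery, the hex colors and function names are placeholders rather than the tutorial's actual palette or code, and we assume the tutorial ends after the Use module instead of wrapping back to Produce.

```python
# Hypothetical sketch of the color-matched "next" arrow logic.
# The colors are placeholders, not the tutorial's actual palette.
from typing import Optional

# Module order follows the science information life cycle.
LIFE_CYCLE = ["produce", "share", "organize", "find", "use"]

MODULE_COLORS = {
    "produce": "#1f77b4",
    "share": "#2ca02c",
    "organize": "#9467bd",
    "find": "#ff7f0e",
    "use": "#d62728",
}


def next_module(current: str) -> Optional[str]:
    """Return the module after `current`, or None after the last one (assumed end)."""
    index = LIFE_CYCLE.index(current)  # raises ValueError for an unknown module
    if index + 1 < len(LIFE_CYCLE):
        return LIFE_CYCLE[index + 1]
    return None


def next_arrow_color(current: str, is_last_page: bool) -> str:
    """Color of the "next" arrow on a page of module `current`.

    On a module's final page the arrow takes the following module's color,
    cueing the student toward the next stage; otherwise it keeps the
    current module's color.
    """
    if is_last_page:
        following = next_module(current)
        if following is not None:
            return MODULE_COLORS[following]
    return MODULE_COLORS[current]
```

For example, `next_arrow_color("share", is_last_page=True)` returns the Organize color, so the arrow previews the stage the student is about to enter.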

Further Revisions … Within Reason

- Which revisions are attainable within the scope of the project?

Tutorial revisions are potentially costly and time-consuming. It is important to set boundaries and consider how many of the desired changes will fit within the limits of the project. In addition to the new, life-cycle-based structure of the tutorial, we considered adding a graphic narrative component as a supplemental way to convey and contextualize the new concepts, but this was ultimately deemed too costly and out of scope. Many other changes proved both easy and sensible to implement. We found it useful to look at the revisions in terms of design, content, navigation, and technology, with the life-cycle framework grounding and informing many of our decisions.

Design: In addition to the previously described design elements relating to the science information life cycle and the library web site, we sought to reinforce a sense of personal context in the tutorial by including images of both scientists and students. Some of these images were purchased from iStockphoto, while others were campus photos, provided by UCI's Office of Strategic Communications, that represent UCI students' own experiences with scientific research. Authentic images from UCI help to personalize the tutorial without alienating users from other institutions. We favored images that matched each section by color or content, to further reinforce the concepts presented.

By selecting visuals that worked with the science information life cycle and the style and structure of the UCI Libraries' web site, and by using local resources such as campus photos, we ensured that the revised tutorial design is attractive to, functional for, and reflective of both the institution that hosts it and the users for whom it is intended.

Navigation: As discussed previously, the science information life cycle also informed the bulk of our revisions relating to navigation. It provides a basic organizational structure and reinforces the connections between different topics. In addition to the cycle, a table of contents appears on the left side of the screen and tabs for individual modules appear at the top. We recommend that, for any tutorial made up of individual units such as this one, navigation be both flexible and easy to follow. Users should be able to find what they want at any time without getting lost, while also seeing that they are making progress through the tutorial.

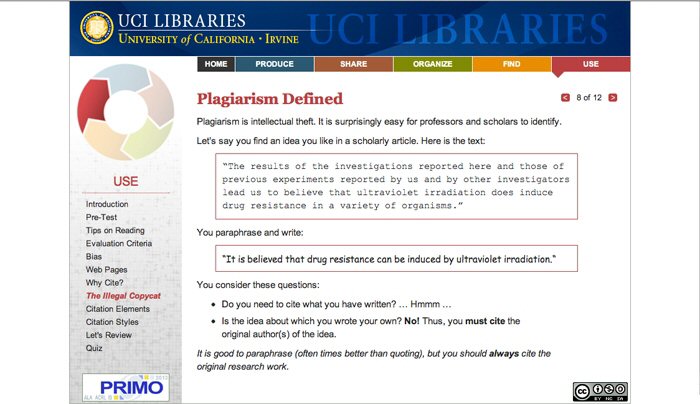

Content: The science information life cycle also determines which areas of IL to focus on. The original tutorial had a few informational gaps that we aimed to address in its second iteration: greater detail about plagiarism and how to avoid it; identifying primary sources in the sciences; and open access. Studies have shown that students find it difficult to identify primary sources in the sciences (Johnson et al. 2011; Shannon & Winterman 2012). While this is not a major focus area of the tutorial, we thought it relevant at least to introduce students to the idea that what counts as a primary source depends on context and that, within the sciences, primary sources report original research performed by the reporting scientists themselves. User testing showed that students were well aware of campus policies concerning plagiarism but were less certain about how to avoid it (Figure 2). In the new tutorial, we placed plagiarism in the context of information use as students might encounter it (Figure 3). The updated version of the tutorial provides examples of material that needs to be cited and shows how to cite it. In particular, we emphasize the need for citations when paraphrasing, which can be a confusing area for many students. Finally, students are likely to be unfamiliar with open access (Warren & Duckett 2010). Open access is becoming an increasingly important part of scientific discourse, with several funding agencies requiring it and more scholars demanding it, so a light introduction to the concept seemed appropriate.

Figure 2: The Plagiarism page from the 2008 Find Science Information tutorial

Figure 3: One of three pages in the 2012 Find Science Information tutorial that deal with plagiarism

Another example of content that we expanded is the original Sources page, which presented one science database, two science journals, and three science subject guides (Figure 4). Our usability test showed that students found it difficult to discern the focus of this page when determining which source(s) to use for finding science information. To address this in the revised tutorial, the information is split into two pages: an article databases page (Figure 5) and a subject guides page (Figure 6). The databases page lists some of the most recognized science databases for the various disciplines and includes an activity that guides students in connecting each resource to a particular discipline. The subject guides page contains a more comprehensive list of the science and engineering guides (adding mathematics, physics, and astronomy). On this page, Nature and Science are still highlighted because they are considered the most prestigious journals in the sciences broadly. We also added a note drawing attention to librarians and their availability to help.

Figure 4: The Science and Engineering Information Sources page from the 2008 Find Science Information tutorial

Figure 5: The Science Databases page in the 2012 Find Science Information tutorial

Figure 6: The Science Subject Guides page in the 2012 Find Science Information tutorial

With additional modules, we also found it necessary to add more activities. These include drag-and-drop exercises where students are asked to supply missing words in phrases, match words with concepts, or arrange a series of concepts in correct order. We updated the module quizzes to address new and revised content. All modules contain similar activities for the sake of consistency.
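Each of these drag-and-drop activity types comes down to comparing a student's arrangement with an answer key. Below is a minimal Python sketch of that comparison, assuming each ordering exercise has a single correct sequence and each matching or fill-in-the-blank slot has exactly one correct item. The names and data format are hypothetical; the tutorial's actual activities were implemented with jQuery UI.

```python
# Hypothetical sketch of checking drag-and-drop answers against a key.
# Names and data formats are illustrative, not the tutorial's code.
from dataclasses import dataclass
from typing import Dict, List

# Answer key for an ordering exercise built on the life cycle stages.
LIFE_CYCLE = ["produce", "share", "organize", "find", "use"]


@dataclass
class Feedback:
    correct: int  # number of items placed correctly
    total: int    # number of items in the answer key

    @property
    def complete(self) -> bool:
        return self.correct == self.total


def check_order(answer: List[str], key: List[str]) -> Feedback:
    """Ordering exercise: count positions where the student's item matches the key."""
    correct = sum(1 for got, want in zip(answer, key) if got == want)
    return Feedback(correct=correct, total=len(key))


def check_matches(answer: Dict[str, str], key: Dict[str, str]) -> Feedback:
    """Matching or fill-in-the-blank: count slots holding the expected item."""
    correct = sum(1 for slot, item in key.items() if answer.get(slot) == item)
    return Feedback(correct=correct, total=len(key))


# A student who swaps two stages gets partial credit and can try again.
attempt = ["produce", "share", "find", "organize", "use"]
result = check_order(attempt, LIFE_CYCLE)
print(f"{result.correct}/{result.total} in place; complete: {result.complete}")
# prints "3/5 in place; complete: False"
```

Reporting a count rather than a simple pass/fail is one possible design choice; it lets the activity tell students how close they are, which suits self-paced review.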

Technology: Another crucial area to consider when updating a tutorial is the technology involved. The original tutorial was created entirely in Flash, which is incompatible with many mobile devices, including iPads and other tablets. To ensure compatibility and greater accessibility, the revised tutorial does not use Flash. The interactive components are built with JavaScript (specifically jQuery and jQuery UI). While interactivity is retained, the animated text was removed because students did not respond well to it in testing. These technology changes also make it possible to link to individual pages of the tutorial, which is useful for finding and reviewing specific information without proceeding through an entire module. For example, librarians or course instructors can send students to a specific part of the tutorial using that page's URL.
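Page-level links require each tutorial page to have its own address. The article does not describe the URL scheme, so the Python sketch below assumes a hypothetical fragment of the form `#module/page` (for example, `#find/2`) and shows how such a link could be built and validated. The domain `example.edu` and both function names are placeholders.

```python
# Hypothetical sketch: the "#module/page" fragment scheme is an assumption
# for illustration, not the tutorial's documented URL structure.
from typing import Optional, Tuple
from urllib.parse import urlparse

MODULES = ("produce", "share", "organize", "find", "use")


def page_link(base_url: str, module: str, page: int) -> str:
    """Build a shareable link to one page; base_url should have no fragment."""
    if module not in MODULES or page < 1:
        raise ValueError(f"no such page: {module}/{page}")
    return f"{base_url}#{module}/{page}"


def parse_page_link(url: str) -> Optional[Tuple[str, int]]:
    """Return (module, page) for a valid page link, or None otherwise."""
    fragment = urlparse(url).fragment  # text after '#', without the '#' itself
    parts = fragment.split("/")
    if len(parts) != 2:
        return None
    module, page = parts
    if module not in MODULES or not page.isdecimal() or int(page) < 1:
        return None
    return module, int(page)
```

For instance, `page_link("https://example.edu/tutorial/", "find", 2)` yields `https://example.edu/tutorial/#find/2`, which an instructor could paste into an email. A link with a missing or unrecognized fragment parses to `None`, so the tutorial could fall back to its home page.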

Conclusion

All online tutorials eventually reach a point at which they must be either revised or retired. When the UCI Libraries decided that the Find Science Information tutorial needed revision, we took the opportunity not only to update its content but also to undertake a more comprehensive overhaul, based on usability testing, that addressed a number of needs and concerns. In particular, we wanted to make clear to undergraduate students, the tutorial's primary users, why and how the tutorial is relevant to them and their lifelong education.

We have proposed questions to consider when evaluating a tutorial for revision and offered the answers we reached through our own revision process. A usability study reveals real students' needs and shows which parts of a tutorial interest or bore them, and so indicates where an existing tutorial should be revised. We used the science information life cycle to orient student users to the larger system of scientific scholarly communication, of which finding information is just one part. We encourage readers to consider how the science information life cycle might be useful in their own IL instruction or online tutorials.

The changes we made were based largely on the results of the initial usability testing. To measure the impact of these revisions properly, we would ideally have conducted a second round of usability testing on the redesigned tutorial. Unfortunately, we could not conduct this crucial second round. The project ran beyond its allotted time, and changes in our careers took us both away from the UCI Libraries. We offer our results in the hope that they will still serve as a useful example for others undertaking similar projects. We recommend including multiple rounds of usability testing in the original project plan.

We did not address how to incorporate this science information tutorial into the student learning experience. However, we recommend Scaramozzino's (2010) outreach strategy, which targets specific undergraduate science courses. This approach ensures that the tutorial reaches the users most likely to benefit from it: undergraduates studying science. Because students can complete the tutorial outside class, it is also easy to integrate into courses that lack the in-class instruction time to cover this material.

The creation of any library instructional tool should be considered an organic process, rather than a discrete undertaking with a beginning, middle, and end. Librarians, instructors, and, most importantly, students will all benefit from regular assessments of and updates to existing tutorials. We hope that other librarians and instructors can benefit from the process we used to update and add context to the Find Science Information tutorial.

The Find Science Information tutorial is available from the UCI Libraries web site at http://www.lib.uci.edu/how/tutorials/FindScienceInformation/public/. It is also available under a Creative Commons license, so librarians can repurpose it for their own institutions.

Acknowledgements

The authors wish to acknowledge gratefully the contributions made to the Find Science Information tutorial by Cathy Palmer, Jeannine Scaramozzino, Mark Vega, and Mathew Williams.

References

The ALA/ACRL/STS Task Force on Information Literacy for Science and Technology. n.d. Information Literacy Standards for Science and Engineering/Technology | Association of College and Research Libraries (ACRL). [Internet]. [Cited July 12, 2013]. Available from: http://www.ala.org/acrl/standards/infolitscitech

Anderson, S.A. & Mitchell, E.R. 2012. Life after TILT: Building an interactive information literacy tutorial. Journal of Library & Information Services in Distance Learning 6(3-4):147-158.

Association of College and Research Libraries. Working Group on Intersections of Scholarly Communication and Information Literacy. 2013. Intersections of Scholarly Communication and Information Literacy: Creating Strategic Collaborations for a Changing Academic Environment. Chicago (IL): Association of College and Research Libraries.

Befus, R. & Byrne, K. 2011. Redesigned with them in mind: Evaluating an online library information literacy tutorial. Urban Library Journal 17(1):1-26.

Blummer, B.A. & Kritskaya, O. 2009. Best practices for creating an online tutorial: a literature review. Journal of Web Librarianship 3(3):199-216.

Bury, S. & Oud, J. 2005. Usability testing of an online information literacy tutorial. Reference Services Review 33(1):54-65.

George, C.A. 2005. Usability testing and design of a library web site: An iterative approach. OCLC Systems & Services 21(3):167-180.

Huvila, I. 2011. The complete information literacy? Unforgetting creation and organization of information. Journal of Librarianship and Information Science 43(4):237-245.

Johnson, C.M., Anelli, C.M., Galbraith, B.J., & Green, K.A. 2011. Information literacy instruction and assessment in an honors college science fundamentals course. College & Research Libraries 72(6):533-547.

King, H.J. & Jannik, C.M. 2005. Redesigning for usability: Information architecture and usability testing for Georgia Tech library's web site. OCLC Systems & Services 21(3):235-243.

Klare, D. & Hobbs, K. 2011. Digital ethnography: Library web page redesign among digital natives. Journal of Electronic Resources Librarianship 23(2):97-110.

Li, P. 2011. Science information literacy tutorials and pedagogy. Evidence Based Library and Information Practice 6(2):5-18.

Nielsen, J. & Landauer, T.K. 1993. A mathematical model of the finding of usability problems. Proceedings of the INTERACT '93 and CHI '93 conference on human factors in computing systems. New York (NY): ACM. p. 206-213. Available from: http://doi.acm.org/10.1145/169059.169166

Noe, N.W. & Bishop, B.A. 2005. Assessing Auburn University Library's tiger information literacy tutorial (TILT). Reference Services Review 33(2):173-187.

Palmer, C., Booth, C. & Friedman, L. 2012. Collaborative customization: Tutorial design across institutional lines. College and Research Libraries News 73(5):243-248.

Reece, G.J. 2007. Critical thinking and cognitive transfer: Implications for the development of online information literacy tutorials. Research Strategies 20(4):482-493.

Rubin, J. & Chisnell, D. 2008. Handbook of Usability Testing. 2nd ed. Indianapolis (IN): Wiley Publishing, Inc.

Scaramozzino, J. 2008. An undergraduate science information literacy tutorial in a web 2.0 world. Issues in Science and Technology Librarianship 55. [Internet]. [Cited July 12, 2013]. Available from: http://www.istl.org/08-fall/article3.html

Scaramozzino, J.M. 2010. Integrating STEM information competencies into an undergraduate curriculum. Journal of Library Administration 50(4):315-333.

Shannon, S. & Winterman, B. 2012. Student comprehension of primary literature is aided by companion assignments emphasizing pattern recognition and information literacy. Issues in Science and Technology Librarianship 68. [Internet]. [Cited July 12, 2013]. Available from: http://www.istl.org/12-winter/refereed3.html

U.S. Department of Health and Human Services. n.d. Templates. [Internet]. [Cited September 23, 2011]. Available from: http://www.usability.gov/templates/index.html

Valentine, B. & Nolan, S. 2002. Putting students in the driver's seat. Public Services Quarterly 1(2):43-66.

Vaughn, D. & Callicott, B. 2003. Broccoli librarianship and Google-bred patrons, or what's wrong with usability testing? College & Undergraduate Libraries 10(2):1-18.

Warren, S. & Duckett, K. 2010. "Why does Google Scholar sometimes ask for money?" Engaging science students in scholarly communication and the economics of information. Journal of Library Administration 50(4):349-372.

Weiner, S.A., Pelaez, N., Chang, K. & Weiner, J. 2011. Biology and nursing students' perceptions of a web-based information literacy tutorial. Communications in Information Literacy 5(2):187-201.

Appendix - Usability Testing Script for the Find Science Information Tutorial

Find Science Information Tutorial Usability Testing - Script (PDF)