Using Evidence in Practice

Improving Reference Service with Evidence

Bonnie R. Nelson

Professor and Associate Librarian for Information Systems, Lloyd Sealy Library

John Jay College of Criminal Justice, City University of New York

New York, New York, United States of America

Email: bnelson@jjay.cuny.edu

Received: 19 Nov. 2015

Accepted: 25 Jan. 2016

© 2016 Nelson.

This is an Open Access article distributed under the terms of the Creative Commons-Attribution-Noncommercial-Share Alike License 4.0 International (http://creativecommons.org/licenses/by-nc-sa/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly attributed, not used for commercial purposes, and, if transformed, the resulting work is redistributed under the same or similar license to this one.

Setting

John Jay College of Criminal Justice is a senior college of the City University of New York (CUNY), serving a student population of approximately 11,000 FTE (full-time equivalent) students. While John Jay now offers majors in a variety of fields, our focus has traditionally been on criminal justice, public management, forensic science, and forensic psychology. Our motto remains "Educating for Justice." Our students are typical graduates of New York City public schools, who often find writing a research paper using academic resources a challenge. John Jay's reference librarians aim to help students meet that challenge through library instruction in selected classes (especially first-year writing classes and research methods classes), outreach to faculty, and reference desk service during every hour the library is open. The Lloyd Sealy Library has a print collection selected to meet the needs of an undergraduate population, as well as a research-level collection in criminal justice that serves a doctoral program and researchers around the world. Our online resources are very strong for a public college of our size, owing to long-standing cooperative arrangements among CUNY libraries and support from the CUNY central office.

Problem

In December 2014, the Library Department Assessment Committee met to review the longitudinal statistics we had been maintaining as part of our participation in both the ACRL annual and ALS biennial library statistics reporting programs. The Library faculty of John Jay College of Criminal Justice have always taken these measures very seriously and have been as assiduous as possible about maintaining both accuracy and consistency in counting methods. As a result, we felt we had fairly reliable numbers going back more than 20 years. We met to review these numbers to see what they could reveal about the work we had been doing and where we could improve.

Many of the trends over this 20- to 24-year period were expected from our knowledge of the history of John Jay College and of general library trends. John Jay College's FTE student numbers increased dramatically before leveling off and then dropping slightly. Circulation of materials from the general collection declined as electronic journals and e-books became commonplace. Both collection and total expenditures increased over time, but they decreased once inflation was factored in, and decreased even more on a per-FTE-student basis. The Library's gate count numbers, however, were somewhat erratic. They most likely fluctuated in response to the use of space elsewhere in the College, which left students with more or less free space to study. But the gate counts never showed a serious decline in use, and informal observation confirmed that the Library continued to be a popular place for students to study alone or in groups. At the height of the semester, students were sometimes sitting on the floor.

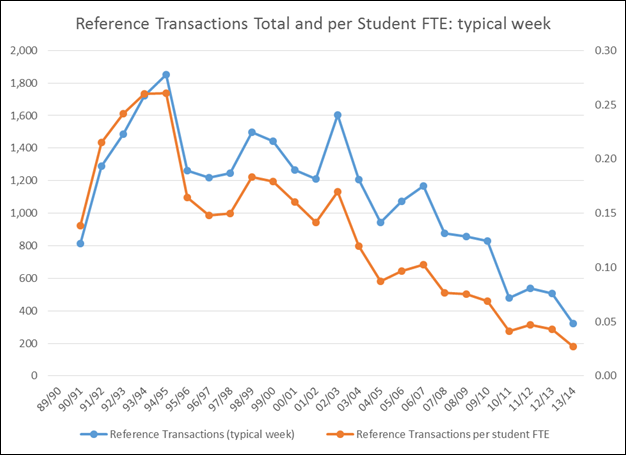

The most troubling and glaring trend observed by the Assessment Committee was the long-term, steep decline in the number of reference questions asked. The decline was in absolute numbers as well as in questions per FTE student (see Figure 1). The Sealy Library faculty had often discussed the proper staffing of the Library Reference Desk, prioritizing this service as perhaps the single most important way to help students succeed. These numbers, however, made us question the wisdom of the staff hours devoted to reference service. Experienced reference librarians pointed out that although the questions were fewer in number, they tended to be complicated and required more time to sort through the students' needs. Still, the decline was so steep and troubling that it became the one statistic the Assessment Committee chose to focus on.

Figure 1

Decline in reference transactions

A month-long discussion involving the entire Library Department ensued. Several librarians noted that, according to the library literature, the decline in reference questions was ubiquitous in academic libraries.

Evidence

Two recent changes in statistics collection made it possible to measure the effectiveness of our efforts. First, after years of relying on a "typical week" mode of counting reference questions, in August 2013 we had switched to a simple, locally developed means of counting every reference transaction. We developed it primarily to evaluate how fully to staff the reference desk, but it also allowed us to see what kinds of questions we were getting, along with other trends.

Second, in September 2014 we re-instituted a chat reference service using LibraryH3lp (Nub Games, https://libraryh3lp.com/). The Sealy Library had previously used QuestionPoint (OCLC, http://www.questionpoint.org/), but dropped the service after concluding that it provided insufficient benefits to our students. For the previous few years we had relied on email reference and infrequently used texting to serve off-campus users. Because the earlier chat experience had disappointed us, we launched the new chat service with muted expectations. We did, however, want to provide online reference service to students in John Jay's first online master's degree program, which also started in September 2014. We were able to provide the new service during peak hours of reference desk use, Monday through Thursday, 11:00 a.m. to 5:00 p.m. We announced the new service in our Library news blog and added a chat widget to both the Library home page and the "Ask a Librarian" page. Otherwise, we did not publicize the service. LibraryH3lp provides excellent statistics on the duration of each chat, the IP address of the questioner, and the URL of the page where the chat was initiated.

Looking at reference statistics in isolation, however, would not necessarily provide a complete picture. The number of reference questions asked is also related to other factors. These include the number of FTE students, the number of classes we teach (since those students tend to be heavy library users), and the number of students entering the library. We therefore needed to look at reference questions in relation to the other statistics we keep.

Implementation

We took several steps to encourage students to ask more reference questions.

To increase in-person reference:

- Signage identifying the reference desk was reviewed and improved
- Reference librarians were encouraged to get up from behind the desk and walk around to be more approachable
- Reference librarians were encouraged to actively approach students who looked like they might need help
- Student staff at the circulation, reserve, and library computer lab desks were reminded to refer patrons needing help to the reference desk

To increase chat reference, we:

- Added four chat hours per week, from 5:00 p.m. to 6:00 p.m. Monday through Thursday
- Added a chat widget to our EZproxy login error page
- Added a chat widget to the results page in all our EBSCOhost databases
- Added a link to our "Ask a Librarian" web page (where a chat widget is located) on ProQuest databases
- Added a chat widget to some of our LibGuides

Outcome

A review of reference statistics at the end of the Spring 2015 semester indicated that our interventions were successful (Table 1).

Without the chat reference service we implemented in Fall 2014, total reference questions asked would have continued their long-term decline from Fall 2013 to Fall 2014, falling by 1.95%. The addition of chat reference reversed that decline, yielding a very modest 0.96% increase. However, we took more aggressive steps for Spring 2015 (described above), and the number of reference questions asked increased by nearly 11% compared to the previous Spring. Even without the chat service, the increase would have been a respectable 5%.
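The percentages in Table 1 are simple percentage changes between the same semester in consecutive years. Each change is computed twice: once on the total, and once on the total minus chats. A minimal Python sketch of that arithmetic uses the Table 1 totals. The function and variable names are illustrative only and are not part of any tool the Library used.

```python
# Percentage change in reference transactions, with and without chat,
# using the semester totals reported in Table 1.

def pct_change(before: int, after: int) -> float:
    """Return the percentage change from `before` to `after`."""
    return (after - before) / before * 100

# Semester totals from Table 1; chat was not offered in 2013/14.
totals = {"Fall 2013": 5744, "Spring 2014": 4547, "Fall 2014": 5799, "Spring 2015": 5040}
chats = {"Fall 2014": 167, "Spring 2015": 250}

for prev, curr in [("Fall 2013", "Fall 2014"), ("Spring 2014", "Spring 2015")]:
    with_chat = pct_change(totals[prev], totals[curr])
    # Subtract chat sessions from the later semester only, since the
    # earlier semester had no chat service to subtract.
    without_chat = pct_change(totals[prev], totals[curr] - chats.get(curr, 0))
    print(f"{prev} -> {curr}: {with_chat:+.2f}% with chat, {without_chat:+.2f}% without chat")
```

Because chat was not offered in 2013/14, the "without chat" figures compare non-chat questions in 2014/15 against all questions in 2013/14.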

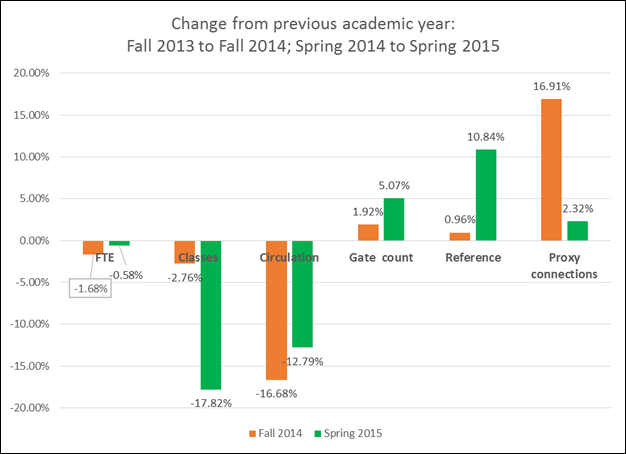

A look at the other statistics we keep indicated that such an unexpected increase in usage was not reflected elsewhere (Figure 2).

From Spring 2014 to Spring 2015 there was actually a small decrease in the number of students at John Jay. There was also a sharp decline in the number of library instruction classes taught, which are the usual driver of students to the reference desk. There was a 5% increase in the number of users passing through our security gates. However, in prior years, when we used the "typical week" method of estimating usage statistics, there was little relationship between gate count and reference questions: gate counts went up and down, but reference questions consistently dropped. Use of our electronic resources, as measured by proxy server connections, showed a much bigger increase in Fall than in Spring.

Table 1

Change in reference transactions, 2013/14 to 2014/15

| | Total reference questions | Chats | Total without chat | Change with chat | Change without chat |
|---|---|---|---|---|---|
| Fall 2013 total | 5744 | | 5744 | | |
| Spring 2014 total | 4547 | | 4547 | | |
| Fall 2014 total | 5799 | 167 | 5632 | | |
| Spring 2015 total | 5040 | 250 | 4790 | | |
| Change fall 2013 to fall 2014 | | | | 0.96% | -1.95% |
| Change spring 2014 to spring 2015 | | | | 10.84% | 5.34% |

Figure 2

Change in library activity: by Fall and Spring semesters

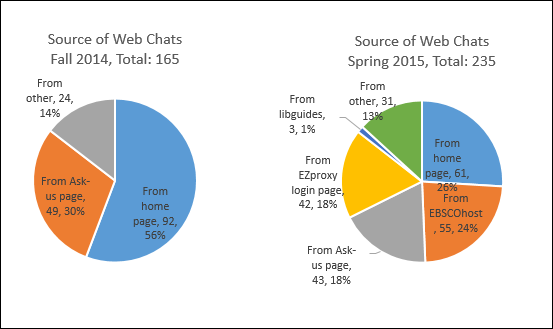

Figure 3

Source of web chats: Fall 2014, Spring 2015

Reflection

The effectiveness of both our traditional and our chat interventions needed to be examined. The chat question was fairly easily answered by looking at the source of the chats, as shown by our LibraryH3lp logs (Figure 3).

In the Fall, over half of our web chat sessions originated from our home page. In the Spring, after we added chat access points, the home page accounted for only 26% of our chats, while 43% came from the new access points. Also, 13% of our chat sessions came during the hour added between 5:00 p.m. and 6:00 p.m. It should be noted that we did not do any additional publicizing of the chat service, although word of mouth and repeat users may account for some of the increase. Randomly selected chat transcripts confirmed that the questions coming from EBSCOhost were indeed from users confused about how to search for information or how to interpret what they were finding. This insight, along with the increased usage, confirmed what we believed to be true: that we are improving our services to students by adding our chat widget to all possible locations.
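The source analysis above amounts to grouping chat sessions by the URL where each chat started and computing each group's share. A minimal Python sketch follows. It assumes the starting URLs have been exported from the chat logs as a plain list of strings. The URL substrings, the `example.edu` home page, and the function names are all hypothetical. None of them reflects LibraryH3lp's actual export format or the Library's real URLs.

```python
from collections import Counter

# Hypothetical rules: a substring of the chat's starting-page URL mapped to a
# source label. Real URL patterns for these pages are not given in the article.
SOURCE_RULES = [
    ("ebscohost.com", "EBSCOhost"),
    ("libguides", "LibGuides"),
    ("ezproxy", "EZproxy login error page"),
    ("ask-a-librarian", "Ask a Librarian page"),
]

def classify(url: str, home_page: str) -> str:
    """Assign one chat's starting URL to a source category."""
    if url.rstrip("/") == home_page.rstrip("/"):
        return "Home page"
    for needle, label in SOURCE_RULES:
        if needle in url.lower():
            return label
    return "Other"

def source_shares(urls: list[str], home_page: str) -> dict[str, float]:
    """Return each source's share of chat sessions, as a percentage."""
    counts = Counter(classify(u, home_page) for u in urls)
    total = sum(counts.values())
    return {label: 100 * n / total for label, n in counts.most_common()}
```

Running `source_shares` separately on each semester's exported URLs would give per-source percentages of the kind summarized in Figure 3.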

Ironically, in mid-Fall 2015, after this study, we realized that most of the questions coming from the chat widget on the EZproxy login error page were from students incorrectly entering their usernames. We have attempted to revise our login pages to eliminate this confusion. If we succeed, we will improve service but reduce our chat counts. This is a paradoxical result, but it is a reminder that the numbers we collect can never tell the full story.

Whether we were successful in our attempts to increase in-person reference was less clear. A 5% increase in in-person reference questions asked (over the previous Spring) would have been unlikely without the librarians' proactive approach, particularly in light of the sharp decrease in library instruction classes. But it was certainly possible that our department-wide discussion of reference statistics had led librarians to record the activity more assiduously, rather than to make greater efforts to engage our students. To answer this question, the writer asked all John Jay reference librarians to fill out a simple two-question survey. It asked whether they were aware that we were trying to increase the number of reference questions asked, and whether they had changed their behavior in any way to elicit more questions.

Out of 19 reference librarians, 15 responded. Ten were aware of the program, but seven felt they did nothing differently last spring, and five said that they recorded reference questions more assiduously. However, three said that they walked around the reference area to be more approachable, and six said that they directly addressed students who looked like they needed help. In comments, two of the librarians indicated that better signage might have been the primary reason for any increase in the number of reference questions. It is clear that at least some of the reference librarians took actions that resulted in more students getting the help they needed.

Conclusion

We found that a decrease in the number of reference questions is not inevitable. Both in-person and remote questions will increase if librarians reach out to connect with users where they are, whether those users are sitting puzzled in the library or working at home with a database they find confusing. This conclusion seems obvious and almost trite, but it was only by looking at the evidence of decreasing reference use that we were motivated to make changes. And, hopefully, seeing how effective these actions have been will encourage us to expand on these changes even further.
